the Creative Commons Attribution 4.0 License.

Overview of the main radiation transport codes

Nikolaos Schetakis

Rodrigo Crespo

José Luis Vázquez-Poletti

Mariano Sastre

Luis Vázquez

Alessio Di Iorio

Accurate predictions of expected radiation dose levels on Mars are often provided by specific radiation transport codes that have been adapted to space conditions. Unsurprisingly, several of the main space agencies and institutions involved in space research and technology tend to work with their own in-house radiation codes. We present the codes that are related to the simulation of the radiation on Mars' surface under different scenarios. All of these codes have similar fields of application, but they differ with respect to several aspects, including the energy range and types of projectiles considered as well as the models of nuclear reactions considered.

The manned exploration and habitation of Mars is of great importance to humankind. While Earth's magnetic field and atmosphere protect us from cosmic radiation, Mars has no such protective magnetosphere. Furthermore, due to its thin atmosphere, instrumentation (particularly electronics) and astronauts are exposed to considerably harmful levels of radiation. Over the course of about 18 months, the Mars Odyssey probe detected ongoing radiation levels that were 2.5 times higher than what astronauts experience on the International Space Station. Moreover, the Mars rover “Curiosity” has allowed us to calculate an average radiation dose over the 180 d journey: it is the equivalent of 24 computerized axial tomography (CAT) scans. A more detailed description of the Mars space radiation environment will be a critical consideration for every part of the astronauts' daily lives.

In this paper, we present the most commonly used radiation transport codes as well as the main differences between them. Finally, we propose a cloud computing solution with a clear advantage in this area. Cloud computing permits the user to adapt the infrastructure to the specific needs of each task in order to improve efficiency, which is of great importance in an environment with a limited power supply.

2.1 HZETRN2015 (NASA)

HZETRN, High charge (Z) and Energy TRaNsport, is a deterministic code (Wilson et al., 2015) developed by NASA that has been used for calculating three-dimensional transport in user-defined combinatorial or ray-trace geometry. It is widely used to analyse radiation levels, as it can handle a wide range of shielding scenarios. It covers solar particle events (SPEs), galactic cosmic rays (GCRs) and low Earth orbit (LEO) environments. In more detail, HZETRN is not actually a single code but rather a suite of codes. These codes solve the Boltzmann transport equation numerically using the appropriate approximations, which, in this case, are the continuous slowing down and straight-ahead approximations. HZETRN has evolved continuously for nearly 30 years, with its initial version developed by a NASA Langley Research Center team headed by John W. Wilson. In addition, an extension of HZETRN that includes pions, muons, electrons, positrons and gammas has been developed and used (Norman et al., 2013).
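For orientation, the one-dimensional transport equation obtained by combining the continuous slowing down and straight-ahead approximations is commonly written in the form sketched below. The notation is an assumption introduced here for illustration and is not taken from this paper: φ_j(x, E) is the flux of particle type j at depth x and kinetic energy per nucleon E, A_j is the mass number, S_j(E) is the stopping power, σ_j(E) is the total macroscopic cross section, and σ_jk(E, E′) is the cross section for producing particles of type j at energy E from collisions of particles of type k at energy E′.

```latex
\left[ \frac{\partial}{\partial x}
     - \frac{1}{A_j}\frac{\partial}{\partial E} S_j(E)
     + \sigma_j(E) \right] \phi_j(x,E)
  = \sum_k \int_E^{\infty} \sigma_{jk}(E,E')\,\phi_k(x,E')\,\mathrm{d}E'
```

The left-hand side describes streaming in depth, continuous energy loss and removal by nuclear collisions; the right-hand side collects the secondaries produced by all other particle types.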

Previous work has validated HZETRN for secondary particle flux in Earth's atmosphere (Norman et al., 2013). In addition, Slaba et al. (2013) compared HZETRN on a minute-by-minute basis to International Space Station dosimeter measurements and found good agreement. HZETRN has also been extensively benchmarked against fully three-dimensional Monte Carlo codes for slab geometries (Heinbockel et al., 2011; Lin et al., 2012), with the results showing that HZETRN generally agrees with the Monte Carlo codes (to the extent that they agree with each other).

2.2 OLTARIS

OLTARIS, On-Line Tool for the Assessment of Radiation In Space, is a space radiation analysis tool available on the World Wide Web (https://oltaris.nasa.gov/, last access: 17 October 2020). It can be used to study the effects of space radiation for various spacecraft and mission scenarios involving humans and electronics. Transport is based on the HZETRN transport code, and the input nuclear physics model is NUCFRG (Wilson et al., 1995).

2.3 SHIELD (ROSCOSMOS)

SHIELD is a Monte Carlo code developed by ROSCOSMOS, the Russian state corporation in charge of space flights and cosmonautics programmes. The SHIELD transport code (Dementyev et al., 1999) has been used for several space applications (Gusev et al., 1994; Spjeldvik et al., 1998; Dementyev et al., 1998; Spjeldvik et al., 1996; Bogomolov et al., 2000; Panasyuk et al., 2000; Kuznetsov et al., 2001; Getselev et al., 2004). SHIELD code is tuned for space shielding and environmental applications and can be used for radiation effect simulation for long-term spacecraft missions.

The main applications of this code are as follows:

- study of the “spallation” process in heavy targets under proton beam irradiation, including the generation of neutrons, energy deposition and the formation of nuclides in the target;
- optimization of the targets of pulsed neutron sources on neutron yield;
- study of the direct transmutation of fission products by the proton beam;
- simulation of heavy ion beam interaction with extended targets and applications to proton and ion beam therapy;
- optimization of the pion-producing targets;
- study of primary radiation damage of structural materials under primary proton beam and secondary radiations;
- calculations of radiation fluxes behind the shielding from galactic and solar cosmic rays and modelling of secondary neutron fields inside a space orbital station;
- study of the accumulation of cosmogenic isotopes in iron meteorites;
- study of background conditions in underground experimental halls, given by hadron cascades in the rock;
- fluctuations of neutron yield in a hadron calorimeter under a single beam of particles;
- spreading of neutrons in the neutron moderation spectrometer (“leaden cube”).

2.4 GEANT/PLANETOCOSMICS (ESA)

GEANT4 (Agostinelli et al., 2003; Allison et al., 2006), developed by the European Space Agency (ESA), is also not just a single radiation code; instead, it can be considered a toolkit that can calculate how the different particles are transported through matter. It is also based on Monte Carlo methods.

In addition, PLANETOCOSMICS (http://cosray.unibe.ch/laurent/planetocosmics/, last check: 17 October 2020) is an application linked to GEANT4 that is able to provide a description of several interesting features of a planetary body, including its geometric figures, the soil, the atmosphere or the magnetosphere. In particular, it works for the planet that we are interested in: Mars. PLANETOCOSMICS is particularly useful for two reasons: (1) it serves to calculate the transport of any arbitrary primary particles that can be found either in or through these planetary environments and (2) it can be employed to obtain an estimation of the number of secondary particles generated at a specific time. Thus, using GEANT4 and PLANETOCOSMICS, we can obtain a great number of the so-called physics lists that describe the particle–matter interactions.

PLANETOCOSMICS (Desorgher et al., 2005) can also be considered a framework for these simulations, as it is based on GEANT4 and is capable of computing physical interactions between GCR and planets like Mercury, the Earth or Mars. The group of physical interactions typically included are electromagnetic and hadronic interactions. It is possible to consider each planetary body's atmosphere, its soil and the presence (or absence) of a magnetic field. Regarding the latter, different magnetic field and atmospheric models are available for each planet. The code has been developed so that it can easily be updated.

There are many applications for this code. Some of the main applications are as follows:

- computing the particle fluxes that result from GCR–planet interaction at user-defined altitudes, atmospheric depths and in the soil;
- computing the energy that is deposited by GCR showers in the planet's atmosphere and in the soil;
- studying the quasi-trapped particle population;
- simulating the propagation of charged particles in the planet's magnetosphere, given sufficient computational power;
- computing the cut-off rigidity as a function of the position and the direction of incidence;
- visualizing the magnetic field lines as well as the trajectories of both primary and secondary particles in the planet's environment.
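The cut-off rigidity mentioned in the list above is compared against a particle's magnetic rigidity, R = pc / (Ze). The following is a minimal sketch of that comparison, not PLANETOCOSMICS code: the function names, the use of the atomic mass unit for ion rest energies and the cut-off value in the usage note are assumptions made for illustration.

```python
import math

PROTON_REST_ENERGY_GEV = 0.938272  # proton rest energy (GeV)
ATOMIC_MASS_UNIT_GEV = 0.931494    # atomic mass unit (GeV), used for ions (approximation)


def rigidity_gv(kinetic_energy_gev_per_nucleon, mass_number, charge_number):
    """Return the magnetic rigidity R = p*c / (Z*e) in GV.

    The momentum follows from the relativistic relation
    (p*c)^2 = T * (T + 2*m*c^2), with T the total kinetic energy.
    """
    if mass_number == 1:
        rest_energy = PROTON_REST_ENERGY_GEV
    else:
        rest_energy = mass_number * ATOMIC_MASS_UNIT_GEV
    kinetic = kinetic_energy_gev_per_nucleon * mass_number
    pc = math.sqrt(kinetic * (kinetic + 2.0 * rest_energy))
    return pc / charge_number


def passes_cutoff(rigidity, cutoff_rigidity_gv):
    """A particle reaches the location only if its rigidity is at least the cut-off."""
    return rigidity >= cutoff_rigidity_gv
```

For instance, `passes_cutoff(rigidity_gv(1.0, 1, 1), 3.0)` checks a 1 GeV proton against a hypothetical 3 GV cut-off; in a real calculation the cut-off depends on position and direction of incidence, as noted above.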

2.5 FLUKA (CERN)

FLUKA (Ferrari et al., 2005; Battistoni et al., 2007), developed by the European Organization for Nuclear Research (CERN), is another multi-particle Monte Carlo transport code. Consequently, it is able to deal with electromagnetic and hadronic showers up to very high energies (100 TeV). Therefore, it is well known when it comes to radioprotection and detector simulation studies.

The initial version of FLUKA was developed more than 50 years ago, in 1964. At that time, CERN required Monte Carlo codes for high-energy beams in order to apply them to many accelerator-related tasks; thus, Johannes Ranft began developing codes for these applications. FLUKA was officially named in approximately 1970, when the first attempts to predict calorimeter fluctuations were done on an event-by-event basis: the code is actually named after the cascades that originate in this context (FLUKA – FLUctuating KAskades). The present code (Fasso et al., 1997; Ferrari et al., 1996; Gandini et al., 1998) is basically the heir to the code initiated in 1990 in order to develop an adequate tool that could work for the Large Hadron Collider (LHC). Nowadays, this code is very popular at many laboratories, including, of course, CERN. FLUKA is actually the tool currently used for nearly all of the radiation calculations and the neutrino beam studies developed by CERN.

A key aspect of FLUKA is its ability to represent transport as well as interactions with all of the elementary hadrons, with different ions (both heavy and light), and with photons and electrons within a wide energy range, extending up to 10 000 TeV for all particles and down to thermal energies for neutrons (Fasso et al., 2005; Battistoni et al., 2006; Albrow et al., 2007). Due to the code's in-built capabilities, the particle fluences, yields and energy deposition can be scored over arbitrary three-dimensional meshes. This can be done both on an event-by-event basis and averaged over a large number of records. Moreover, benchmarking of FLUKA has been widely performed with respect to the available accelerator and GCR experimental data. The beam energies taken into account range from a few mega electron volts (lower limit) to GCR energies (upper limit). Considering an arbitrary solar activity modulation parameter, the spectra can be modulated within FLUKA. If past dates are the target, we can just use the current solar activity obtained from the ground-based neutron counters' measurements.

Regarding the types of interactions covered by FLUKA, the modern version of the code can be used to treat all of the components of radiation fields within the following approximate energy ranges:

- 0–100 TeV for hadron–hadron and hadron–nucleus interactions;
- 1 keV–100 TeV in the case of electromagnetic interactions;
- 0–20 MeV for charged particle transport–ionization energy loss neutron multi-group transport interactions.

Moreover, analogue or biased calculations are also possible. Finally, the range from 0 to 10 000 TeV n−1 for nucleus–nucleus and hadron–nucleus interactions is still under development.

2.6 PHITS

The Particle and Heavy Ion Transport code System (PHITS) is a general purpose Monte Carlo particle transport simulation code developed and verified as part of a collaboration between several Japanese organizations: Japan Atomic Energy Agency (JAEA), Research Organization for Information Science and Technology (RIST), High Energy Accelerator Research Organization (KEK) and several other institutes (Niita et al., 2010; Iwamoto et al., 2007). It can deal with the transport of all particles over wide energy ranges, using several nuclear reaction models and nuclear data libraries. PHITS is used in the fields of accelerator technology, radiotherapy, space radiation and in many other fields related to particle and heavy ion transport phenomena.

When simulating the transport of charged particles and heavy ions, knowledge of the magnetic field is sometimes necessary to estimate beam loss, heat deposition in the magnet and beam spread. PHITS can provide arbitrary magnetic fields in any region of the set-up geometry. It is possible to use PHITS to simultaneously simulate the trajectories of charged particles in a field as well as the collisions and ionization process that they experience.

2.7 HETC-HEDS

The High Energy Transport Code – Human Exploration and Development of Space (HETC-HEDS) computer code is another Monte-Carlo-based method. It has been specifically designed to provide solutions to radiation problems (Gabriel et al., 1995), mainly those that involve the secondary particle fields typically produced by the space radiation interaction with the various types of shielding and equipment involved in the different missions. HETC-HEDS is a three-dimensional generalized radiation transport code that is able to analyse and handle the radiation fields that might affect critical human organs in the context of a potential crewed spaceflight. Therefore, we refer to tissues such as those that compose the central nervous system or the bone marrow. It is possible to apply this code to a wide range of particle species and energies, which is very helpful. Among other elements, HETC-HEDS contains a heavy ion collision event generator that can track nuclear interactions and perform data analysis (statistics). In addition, it is capable of simulating particle interactions, which is a crucial issue with respect to solving this type of problem. To do so, it uses a pseudo-random number generator; in combination with the appropriate physics characterization, it is possible to record the trajectories followed by both the primary and the secondary particles involved in the nuclear collision of GCR and solar event particles. A typical application of this method would be the estimation of how these particles interact with matter, including the shielding material the equipment from crewed spaceflights may have, biological organisms (such as astronauts) and the electronic equipment that a mission needs to fly with. This code considers nearly all of the particles that are typically required for space radiation calculations. For example, HETC-HEDS considers the interactions of protons, neutrons, π+, π−, μ+, μ−, light ions and heavy ions. 
In the model, arbitrary position, angle and energy values are assigned throughout a spatial boundary of interest. This Monte Carlo code tracks each and every particle in a cascade until one of the following issues occurs: (1) a nuclear collision, (2) absorption, (3) decay or escape from the spatial boundary or (4) elimination as a result of crossing a domain variable cut-off. Thus, it is necessary to focus on the nuclear reactions and processes occurring. In this case, they are accounted for using physical models so that the main issues (energy loss, range straggling, Coulomb scattering, etc.) are properly handled. Naturally, the energy and nucleon conservation principles should not be violated when the collisions (elastic and nonelastic) are computed. A more detailed explanation of the inner workings and benchmarking of HETC-HEDS is given in the following references: Townsend et al. (2005), Miller and Townsend (2004, 2005), Charara et al. (2008) and Heinbockel et al. (2011). However, HETC-HEDS, as noted previously for HZETRN, does not follow the liberated electrons (delta rays) produced by Coulomb interactions. Thus, the code calculates the energy lost using the difference between the particle energies entering and exiting a target component (true linear energy transfer) but not the actual energy deposited.
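The history-tracking loop described above can be illustrated with a toy model. The sketch below is not HETC-HEDS: it is a one-dimensional slab with made-up physics (exponential free paths, a fixed absorption probability, a random fractional energy loss per collision), it omits decay, and every name and default value is an assumption chosen for illustration. It only shows how each history is followed until it escapes, is absorbed or falls below an energy cut-off.

```python
import math
import random
from collections import Counter


def track_particle(rng, slab_thickness_cm, mean_free_path_cm,
                   absorption_prob, energy_mev, cutoff_mev):
    """Follow one particle until it escapes, is absorbed or drops below the cut-off."""
    x = 0.0   # depth inside the slab (cm)
    mu = 1.0  # direction cosine relative to the slab normal
    while True:
        # Sample the distance to the next collision from an exponential law.
        step = -mean_free_path_cm * math.log(1.0 - rng.random())
        x += mu * step
        if x < 0.0 or x > slab_thickness_cm:
            return "escaped"
        if rng.random() < absorption_prob:
            return "absorbed"
        # Toy collision: lose a random fraction of the energy.
        energy_mev *= rng.random()
        if energy_mev < cutoff_mev:
            return "cut_off"
        # Toy scattering: pick a new direction cosine uniformly.
        mu = rng.uniform(-1.0, 1.0)


def run_histories(n_histories, seed=0, slab_thickness_cm=10.0,
                  mean_free_path_cm=5.0, absorption_prob=0.2,
                  energy_mev=1000.0, cutoff_mev=10.0):
    """Run independent histories and count how each one ended."""
    rng = random.Random(seed)  # pseudo-random number generator with a fixed seed
    fates = Counter()
    for _ in range(n_histories):
        fates[track_particle(rng, slab_thickness_cm, mean_free_path_cm,
                             absorption_prob, energy_mev, cutoff_mev)] += 1
    return fates
```

Calling `run_histories(10000)` returns a tally of the three possible fates; a production code would replace the toy collision step with physical models for energy loss, straggling and scattering.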

2.8 COMIMART-MC

The COMIMART-MC, COmplutense and Michigan Mars Radiative Transfer model – Monte Carlo, is a Monte Carlo code to calculate solar irradiance that reaches the surface of Mars in the spectral range from the ultraviolet (UV) to the near infrared (NIR), and it has been developed and validated under different scenarios (Retortillo et al., 2015, 2016, 2017). The model includes up-to-date wavelength-dependent radiative properties of dust, water, ice clouds and gas molecules. It enables the characterization of the radiative environment in different spectral regions under a wide variety of conditions.

It is worth exploring the role of dust in the Martian atmosphere (Retortillo et al., 2017), as it is quite a relevant aspect to consider when trying to reach the goal of improving these radiation transport codes. This element may play a very important role under certain circumstances, particularly because a dust storm may be so intense that it affects the whole planet. In these cases, the effective radius of the dust particles needs to be very well characterized in order to provide an accurate estimation of several atmospheric properties, including the opacity, scattering and albedo among others.

In this model, the dust effective radii are employed so that the radiative properties are properly characterized. By using the refractive indexes for different particle sizes and shapes, extinction efficiencies, single scattering, albedos and scattering phase functions are provided. The main assumption consists of accepting that all of the particles have a cylindrical shape, with a height and diameter of equal magnitude, following Wolff et al. (2009, 2010).

As previously mentioned, most of the codes considered by agencies and organizations are based on Monte Carlo codes. A non-exhaustive list of these Monte Carlo codes is given in the following:

- ETRAN (Berger, Seltzer; NIST 1978);
- EGS4 (Nelson, Hirayama, Rogers; SLAC 1985), https://www.slac.stanford.edu/egs, last access: 17 October 2020;
- EGS5 (Hirayama et al.; KEK-SLAC 2005), http://rcwww.kek.jp/research/egs/egs5.html, last access: 17 October 2020;
- EGSnrc (Kawrakow and Rogers; NRCC 2000), https://nrc-cnrc.github.io/EGSnrc/, last access: 17 October 2020;
- Penelope (Salvat et al.; U. Barcelona 1999), http://www.oecd-nea.org/lists/penelope.html, last access: 17 October 2020;
- MARS (James and Mokhov; FNAL), https://mars.fnal.gov/, last access: 17 October 2020;
- MCNPX/MCNP5 (LANL 1990), https://mcnpx.lanl.gov/, last access: 17 October 2020.

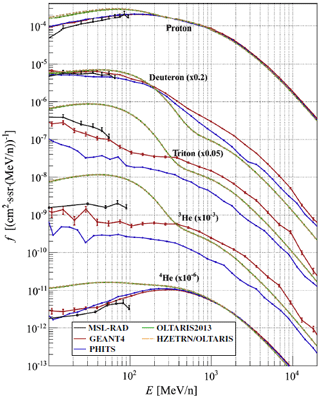

As there have been several studies comparing transport codes with one another (Norbury et al., 2017; Sihver et al., 2008; Porter et al., 2014), it is worth focusing on the wide range of the energy spectrum analysed. The largest differences from one transport code to another occur below the several hundred mega electron volt region. This may be due to the fact that every code considers a different nuclear model. At the same time, we organized the structure for large and massive simulations in the framework of cloud computing (Vázquez et al., 2019), which is partly explained in the following Sect. 4.

On the other hand, differences are found to be significantly more pronounced for thin shielding conditions, as transport processes do not play such a relevant role in these cases. As discussed by Matthiä et al. (2016), a maximum 20 % difference from one code to another is expected. Following Wimmer-Schweingruber et al. (2016), the spectra on the Martian surface can be found in Fig. 1. These data were collected from the Radiation Assessment Detector (RAD) onboard the Mars Science Laboratory (MSL) rover Curiosity on the surface of Mars between 2012 and 2013. Data are compared with calculations from different model simulations for the energy range between 10 MeV n−1 and 20 GeV n−1.

Figure 1. Spectra on the Martian surface measured between 2012 and 2013 by MSL-RAD (Ehresmann et al., 2014) and calculated for the same period using different simulation tools for the energy range from 10 MeV n−1 to 20 GeV n−1.

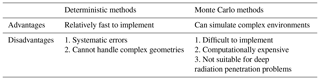

According to the methods considered, radiation transport codes can be classified into deterministic methods (HZETRN) and Monte Carlo methods (SHIELD, GEANT4, FLUKA, PHITS and HETC-HEDS). In the following, we will analyse these methods in a little more detail.

Deterministic methods are computationally less demanding. Their main disadvantage is that they can only be used in cases where transport equations can be solved analytically. Thus, this method is accurate for simple shielding geometries. Furthermore, deterministic codes suffer from systematic errors due to the need for phase space discretization. Monte Carlo methods, in contrast, are typically more difficult to implement, usually require more processing power and struggle to produce accurate results in deep radiation penetration problems. Nevertheless, they can simulate complex shielding geometries (Oliveira and Oliveira, 2005), which can be an advantage in certain situations. Overall, deterministic and Monte Carlo methods complement each other and provide accurate results in space-related applications. On the one hand, deterministic methods can be used when working with limited computational resources (e.g. Mars rovers, orbiters, etc.) or in the early phase of a space shielding design, where the geometric requirements are still unknown. On the other hand, Monte Carlo methods perform better in the late stages of shielding design, where fine-tuning is required. In Table 1, we present the main advantages and disadvantages of both deterministic and Monte Carlo methods.
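The trade-off can be made concrete with a toy problem that has a closed-form answer: the uncollided transmission through a homogeneous slab, exp(−Σt). The sketch below compares that analytic value with a Monte Carlo estimate and shows why deep penetration is costly for an analogue Monte Carlo calculation. It is an illustration only; the function names, the cross section and the history counts are assumptions and do not correspond to any of the codes discussed here.

```python
import math
import random


def analytic_transmission(sigma_per_cm, thickness_cm):
    """Closed-form uncollided transmission through a homogeneous slab."""
    return math.exp(-sigma_per_cm * thickness_cm)


def monte_carlo_transmission(sigma_per_cm, thickness_cm, n_histories, seed=0):
    """Estimate the same quantity by sampling exponential free paths."""
    rng = random.Random(seed)
    transmitted = sum(
        1 for _ in range(n_histories)
        if rng.expovariate(sigma_per_cm) > thickness_cm
    )
    return transmitted / n_histories


def relative_standard_error(probability, n_histories):
    """Relative statistical error of an analogue (unbiased, unweighted) tally."""
    return math.sqrt((1.0 - probability) / (n_histories * probability))


if __name__ == "__main__":
    for thickness in (2.0, 20.0):
        exact = analytic_transmission(1.0, thickness)
        estimate = monte_carlo_transmission(1.0, thickness, 100_000)
        error = relative_standard_error(exact, 100_000)
        print(f"t = {thickness:5.1f} cm  exact = {exact:.3e}  "
              f"MC = {estimate:.3e}  expected rel. error = {error:.2f}")
```

For the thin slab the estimate converges quickly, whereas for the thick slab almost no history is transmitted and the expected relative error exceeds 100 %. This is the reason why variance reduction (biased calculations) or deterministic solvers are preferred for deep penetration.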

The execution of the tasks necessary to process the radiation data and perform the calculations of the models requires a high computational processing capacity. A highly scalable system is necessary for the execution of distributed processes in order to reduce calculation time and obtain results with high accuracy.

Cloud computing is based on the use of different computing resources (CPU, memory, disc, network, etc.) that can be scaled on demand and used together to execute different tasks (Armbrust et al., 2010). This methodology provides a clear advantage in this area thanks to its dynamism when it comes to managing computing resources. Its elasticity permits the user to adapt the infrastructure to the specific needs of each task to improve efficiency (Dillon et al., 2010).

Currently, cloud computing is very advanced and widespread, and there are many cloud infrastructure providers, such as Amazon Web Services, Google Cloud, IBM Softlayer and Microsoft Azure among others.

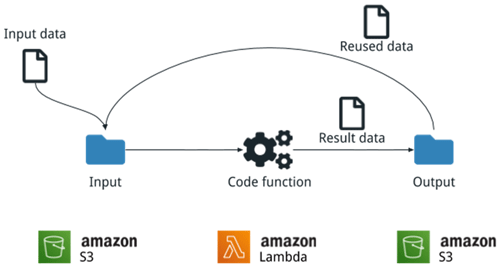

In addition, new cloud computing paradigms have been developed in recent years to adapt to the high demand for new technologies. One of these is serverless computing (Baldini et al., 2010), a function-as-a-service computing model in which infrastructure management is performed entirely by cloud providers, so that the only element that is required to execute processing is the source code of the tasks to be executed (Vasquez-Poletti et al., 2018).

Serverless computing is very interesting for the execution of distributed tasks that are necessary for the processing of radiation data. The advantages of serverless computing have been studied in many other research areas (Crespo-Cepeda et al., 2019; Feng et al., 2018; Yan et al., 2018). Serverless technology has proven useful in many respects, such as simplifying configuration, because there is no complex infrastructure to manage (Villamizar et al., 2016); managing such infrastructure slows the research process down. Furthermore, in the context of massive code parallel execution, dynamic and elastic scaling of this solution is assured, as it adapts according to the capabilities required by each of the tasks at all times (Raman et al., 1998).

Last but not least, the serverless model offers reduced execution costs because it is no longer necessary to rent a computing infrastructure. In fact, cost is limited to the execution time of each process (Adzic et al., 2017).

For example, as shown in Fig. 2, the Amazon AWS cloud infrastructure can be used to execute a generic code that needs some input data and produces data results to be stored. The architecture is based on two Amazon AWS services: AWS Lambda, the serverless computing platform responsible for processing the code, and Amazon S3, the object storage service where the input data are uploaded and the result data are saved.
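A minimal sketch of such a function is given below, assuming that the Lambda function is triggered by an S3 upload notification. It is not the implementation used in this work: `process` is a hypothetical placeholder for the radiation computation, and the bucket names and the `results/` key prefix are assumptions. The S3 client is passed in as an argument so that the logic can be exercised without AWS access; in a deployment it would be created with `boto3.client("s3")`.

```python
import json
from urllib.parse import unquote_plus


def process(input_bytes):
    """Hypothetical placeholder for the actual computation on the input data."""
    values = [float(token) for token in input_bytes.decode("utf-8").split()]
    summary = {"count": len(values), "sum": sum(values)}
    return json.dumps(summary).encode("utf-8")


def make_handler(s3_client, output_bucket):
    """Build a Lambda-style handler bound to an S3 client and an output bucket."""

    def handler(event, context):
        # An S3 notification carries the bucket name and the URL-encoded object key.
        record = event["Records"][0]["s3"]
        bucket = record["bucket"]["name"]
        key = unquote_plus(record["object"]["key"])

        # Download the input, run the computation and store the result.
        body = s3_client.get_object(Bucket=bucket, Key=key)["Body"].read()
        result = process(body)
        output_key = "results/" + key + ".json"
        s3_client.put_object(Bucket=output_bucket, Key=output_key, Body=result)
        return {"statusCode": 200, "output_key": output_key}

    return handler


# In a deployment (assumed names), the module-level entry point could be:
#   import boto3
#   lambda_handler = make_handler(boto3.client("s3"), "my-results-bucket")
```

Because each uploaded input file triggers its own invocation, many inputs are processed in parallel without any explicit management of servers, and cost is incurred only while `handler` is running.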

We have shown that there are several radiative transfer codes currently employed by the different space agencies and institutions, and they are mainly developed in-house by the respective institutes. These codes are useful for specific applications in each case, as they can simulate the surface radiation on Mars considering a variety of scenarios. This code taxonomy proves that all of the codes can be considered in similar fields; therefore, their application under most of the conditions is possible. However, as the codes differ with respect to key aspects, such as the energy range, the types of projectiles considered or the models of nuclear reactions considered, all of them have a specific situation in which they are the most appropriate.

A deep comparison of the computation time required by each of the codes as well as the consideration of the difference between cloud computing and traditional computing is suggested as a future line of research. Using such studies, the performance of the codes and techniques can be evaluated and the available resources can be optimized.

LV and ADI conceived the idea for the study and contributed to the formulation of the objectives and methodology of the research; they were also involved in the administration and supervision of the project as well as in funding acquisition and the implementation of the research schedule, which led to this publication. NS and MS worked out the technical details for Sects. 1, 2 and 3 and wrote the corresponding sections. RC and JLVP provided the distributed/cloud computing content (i.e. Sect. 4). All authors contributed to the investigation processes, were involved in the interpretation of the results and contributed to writing the final paper.

The authors declare that they have no conflict of interest.

This research has been carried out in the framework of the IN-TIME project, which is funded by the European Commission under the Horizon 2020 Marie Skłodowska-Curie actions Research and Innovation Staff Exchange (RISE; grant agreement no. 823934). The authors are thankful to the institutions and individual members who participated in the project. Through other research projects, the Spanish Government (Ministerio de Economía, Industria y Competitividad and Ministerio de Ciencia e Innovación) additionally supported Luis Vázquez (ESP2016-79135-R), Mariano Sastre (PCIN‐2014-013-C07-04, PCIN-2016-080, CGL2016‐78702-C2-2-R and PID2019‐105306RB‐I00) and JLVP (RTI2018-096465-B-I00, EDGECLOUD). José Luis Vázquez-Poletti was also supported by the Madrid Regional Government (project EDGEDATA, S2018/TCS-4499). We would like to thank the associate editor and two anonymous reviewers for their thoughtful comments and efforts towards improving our paper.

This research has been supported by the European Commission, Horizon 2020 framework programme (grant no. 823934).

This paper was edited by Ralf Srama and reviewed by two anonymous referees.

Adzic, G. and Chatley, R.: Serverless computing: economic and architectural impact, in: Proceedings of the 2017 11th Joint Meeting on Foundations of Software Engineering, 884–889, 2017.

Agostinelli, S., Allison, J., Amako, K., et al.: GEANT4 – A simulation Toolkit, Nucl. Instrum. Meth. A, 506, 250–303, https://doi.org/10.1016/S0168-9002(03)01368-8, 2003.

Albrow, M. and Raja, R.: Hadronic Shower Simulation Workshop (AIP Conference Proceedings/High Energy Physics), American Institute of Physics; 2007th Edition (April 4, 2007), ISBN-13: 978-0735404014, 31–49, 2007.

Allison, J., Amako, K., Apostolakis J., et al.: Geant4 – A simulation toolkit, IEEE Trans. Nucl. Sci., 53, 270–278, 2006.

Armbrust, M., Fox, A., Griffith, R., Joseph, A. D., Katz, R., Konwinski, A., and Zaharia, M.: A view of cloud computing, Commun. ACM, 53, 50–58, 2010.

Baldini, I., Castro, P., Chang, K., Cheng, P., Fink, S., Ishakian, V., Suter, P.: Serverless computing: Current trends and open problems, in: Research Advances in Cloud Computing, Springer, Singapore, 1–20, 2010.

Battistoni, G., Muraro S., Sala, P. R., Cerutti, F., Ferrari, A., Roesler, S., Fasso, A., and Ranft, J.: The FLUKA code: Description and benchmarking, Proceedings of the Hadronic Shower Simulation Workshop 2006, Fermilab, 6–8 September 2006.

Battistoni, G., Muraro, S., Sala, P. R., Cerutti, F., and Ferrari, A.: The FLUKA code: description and benchmarking, AIP Conference Proceedings, 896, 31–49, 2007.

Bogomolov, A. V., Dementyev, A. V., Kudryavtsev, M. I., Myagkova, I. N., Pavlovich Ryumin, S., Svertilov, S. I., and Sobolevsky, N.: Fluxes and energy spectra of secondary neutrons with energies >20 MeV as measured by the MIR orbital station, the SALYUT-7-KOSMOS-1686 orbital complex, and the KORONAS-I satellite: Comparison of experimental data and model calculations, Cosmic Res., 38, 28–32, 2000.

Charara, M. Y., Townsend, W. L., Gabriel, A. T., and Zeitlin, C.: HETC-HEDS code validation using laboratory beam energy loss spectra Data, IEEE Trans. Nucl. Sci., 55, 3164–3168, https://doi.org/10.1109/TNS.2008.2006607, 2008.

Crespo-Cepeda, R., Agapito, G., Vazquez-Poletti, J. L., and Cannataro, M.: Challenges and Opportunities of Amazon Serverless Lambda Services in Bioinformatics, in: Proceedings of the 10th ACM International Conference on Bioinformatics, Computational Biology and Health Informatics, 663–668, 2019.

Dementyev, A. V. and Sobolevsky, N. M.: SHIELD – Universal Monte Carlo Hadron Transport Code: Scope and Applications. Space Radiation Environment Modeling: New Phenomena and Approaches, 7–9 October 1997, Workshop Abstracts, MSU, Moscow, 1997, p. 4.4, Radiation Measurements, 30, 553, 1999.

Dementyev, A. V., Nymmik, R. A., and Sobolevsky, N. M.: Secondary Protons and Neutrons Generated by Galactic and Solar Cosmic Ray Particles behind 1–100 g/cm2 Aluminium Shielding, Adv. Space Res., 21, 1793, https://doi.org/10.1016/S0273-1177(98)00069-6, 1998.

Desorgher, L., Flückiger, E. O., and Gurtner, M.: The PLANETOCOSMICS Geant4 application, 36th COSPAR Scientific Assembly, Held 16–23 July 2006, in Beijing, China, Meeting abstract from the CDROM, #2361, 2005.

Dillon, T., Wu, C., and Chang, E.: Cloud computing: issues and challenges, in: 2010 24th IEEE international conference on advanced information networking and applications, 27–33, 2010.

Fasso, A., Ferrari, A., Ranft, J., and Sala, P. R.: New developments in FLUKA modelling hadronic and EM interactions Proc. 3rd Workshop on Simulating Accelerator Radiation Environments, KEK, Tsukuba (Japan) 7–9 May 1997, edited by: Hirayama, H., KEK Proceedings 97-5, 32–43, 1997.

Fasso, A., Ferrari, A., Ranft, J., and Sala, P. R.: FLUKA: a multi-particle transport code, CERN-2005-10, INFN/TC 05/11, SLAC-R-773, 2005.

Feng, L., Kudva, P., Da Silva, D., and Hu, J.: Exploring serverless computing for neural network training, in: 2018 IEEE 11th International Conference on Cloud Computing (CLOUD), 334–341, 2018.

Ferrari, A. and Sala, P. R.: Proc. of the “Workshop on Nuclear Reaction Data and Nuclear Reactors Physics, Design and Safety”, International Centre for Theoretical Physics, Miramare-Trieste, Italy, 15 April–17 May 1996.

Ferrari, A., Sala, P. R., Fasso, A., and Ranft, J.: FLUKA: A multi-particle transport code, Stanford Linear Accelerator Center, Stanford University, Stanford, CA 94309, October 2005.

Gabriel, T. A., Bishop, B. L., Alsmiller, F. S., Alsmiller, R. G., and Johnson, J. O.: CALOR95: A Monte Carlo Program Package for the Design and Analysis of Calorimeter Systems, Oak Ridge National Laboratory Technical Memorandum 11185, 1995.

Gandini, A. and Reffo, G.: Nuclear Reaction Data and Nuclear Reactors, Proceedings of the Workshop, ICTP, Trieste, Italy, 15 April, https://doi.org/10.1142/3319, Vol. 2, p. 424, 1998.

Getselev, I., Rumin, S., Sobolevsky, N., Ufimtsev, M., and Podzolko, M.: Absorbed Dose of Secondary Neutrons from Galactic Cosmic Rays inside International Space Station, COSPAR02-A-02485; F2.5-0015-02, F046, 2004.

Gusev, A. A., Martin, I. M., Pugacheva, G. I., and Sobolevsky, N. M.: Model of Secondaries Produced in Craft and Spacecraft by Neutrons and Protons of Cosmic Rays, Proc. of 8th International Conference on Radiation Shielding (ICRS 8), USA, p. 619, 1994.

Heinbockel, H. J., Slaba, C. T., Tripathi, K. R., Blattnig, R. S., Norbury, W. J., Badavi, F. F., Townsend, W. L., Handler, T., Gabriel, A. T., Pinsky, S. L., Reddell, B., and Aumann, R. A.: Comparison of the transport codes HZETRN, HETC and FLUKA for galactic cosmic rays, Adv. Space Res., 47, 1089–1105, https://doi.org/10.1016/j.asr.2010.11.013, 2011.

Iwamoto, Y., Niita, K., Sakamoto, Y., Sato, T., and Matsuda, N.: Validation of the event generator mode in the PHITS code and its application, International Conference on Nuclear Data for Science and Technology, Nice, France, from April 22 to April 27, https://doi.org/10.1051/ndata:07417, 2007.

Kuznetsov, N. V., Nymmik, R. A., Panasyuk, M. I., and Sobolevsky, N. M.: Equivalent Dose During Long-Term Interplanetary Missions Depending on Solar Activity Level, American Institute of Physics Conference Proceedings, Space Technology and Applications International Forum 2001, 11–14 February 2001, Albuquerque, New Mexico, USA, edited by: Mohamed, S. V., Vol. 552, N.Y., Springer-Verlag, 1240–1245, https://doi.org/10.1063/1.1358079, 2001.

Lin, Z. W., Adams, J. H., Barghouty, A. F., Randeniya, S. D., Tripathi, T. K., Watts, J. W., and Yepes, P. P.: Comparisons of several transport models in their predictions in typical space radiation environments, Adv. Space Res., 49, 797–806, 2012.

Matthiä, D., Ehresmann, B., Lohf, H., Kohler, J., Zeitlin, C., Appel, J., Tatsuhiko, S., Slaba, T., Martin, C., Berger, T., Boehm, E., Boettcher, S., Brinza, E. D., Burmeister, S., Guo, J., Hassler, M. D., Posner, A., Rafkin, R. C., Gunther, R., Wilson, W. J., and Wimmer-Schweingruber, F. R.: The Martian surface radiation environment – a comparison of models and MSL/RAD measurements, J. Space Weather Space Clim., 6, 1–17, https://doi.org/10.1051/swsc/2016008, 2016.

Miller, M. T., Townsend, W. L., Gabriel, A. T., and Handler, T.: HETC-HEDS fragment fluence predictions compared with high-energy heavy ion beam laboratory data, Am. Nucl. Soc., 91, p. 707, 2004.

Niita, K., Matsuda, N., Iwamoto, Y., Iwase, H., Sato, T., Nakashima, H., Sakamoto, Y., and Sihver, L.: PHITS: Particle and Heavy Ion Transport code System, Version 2.23, JAEA-Data/Code 2010-022, 2010.

Norbury, J. W., Slaba, T. C., Sobolevsky, N., and Reddell, B.: Comparing HZETRN, SHIELD, FLUKA and GEANT transport codes, Life Sci. Space Res., 14, 64–73, https://doi.org/10.1016/j.lssr.2017.04.001, 2017.

Norman, R. B., Slaba, T. C., and Blattnig, S. R.: An Extension of HZETRN for Cosmic Ray Initiated Electromagnetic Cascades, Adv. Space Res., 51, 2251–2260, https://doi.org/10.1016/j.asr.2013.01.021, 2013.

Oliveira, A. D. and Oliveira, C.: Comparison of deterministic and Monte Carlo methods in shielding design, Radiat. Prot. Dosim., 115, 254–257, https://doi.org/10.1093/rpd/nci187, 2005.

Panasyuk, M. I., Bogomolov, A. V., and Bogomolov, V. V.: Background Fluxes of Neutrons in Near-Earth Space: Experimental Results of SINP, Preprint 2000-9/613, Skobeltsyn Institute of Nuclear Physics MSU, Moscow, 2000.

Porter, J. A., Townsend, L., Spence, H., Golightly, M., Schwadron, N., Kasper, J., Case, A. W., Blake, J. B., and Zeitlin, C.: Radiation environment at the Moon: Comparisons of transport code modeling and measurements from the CRaTER instrument, Space Weather, 12, 329–336, https://doi.org/10.1002/2013SW000994, 2014.

Raman, R., Livny, M., and Solomon, M.: Matchmaking: Distributed resource management for high throughput computing, in: Proceedings, The Seventh International Symposium on High Performance Distributed Computing (Cat. No. 98TB100244), 140–146, 1998.

Retortillo, A. V., Valero, F., Vázquez, L., and Martinez, G. M.: A model to calculate solar radiation fluxes on the Martian surface, J. Space Weather Space Clim., 5, A33, https://doi.org/10.1051/swsc/2015035, 2015.

Retortillo, A. V., Lemmon, M. T., Martinez, G. M., Valero, F., Vázquez, L., and Martin, M. L.: Seasonal and interannual variability of solar radiation at Spirit, Opportunity and Curiosity landing sites, Física de la Tierra, 28, 111–127, https://doi.org/10.5209/rev_FITE.2016.v28.53900, 2016.

Retortillo, A. V., Martinez, G. M., Renno, N. O., Lemmon, M. T., and Torre-Juárez, M. T.: Determination of dust aerosol particle size at Gale Crater using REMS UVS and Mastcam measurements, Geophys. Res. Lett., 44, 3502–3508, https://doi.org/10.1002/2017GL072589, 2017.

Sihver, L., Mancusi, D., Niita, K., Sato, T., Townsend, L., Farmer, C., Pinsky, L., Ferrari, A., Cerutti, F., and Gomes, I.: Benchmarking of calculated projectile fragmentation cross-sections using the 3-D, MC codes PHITS, FLUKA, HETC-HEDS, MCNPX_HI, and NUCFRG2, Acta Astronaut., 63, 865–877, https://doi.org/10.1016/j.actaastro.2008.02.012, 2008.

Slaba, T. C., Blattnig, S. R., Reddell, B., Bahadori, A., Norman, R. B., and Badavi, F.: Pion and electromagnetic contribution to dose: Comparisons of HZETRN to Monte Carlo results and ISS data, Adv. Space Res., 52, 62, https://doi.org/10.1016/j.asr.2013.02.015, 2013.

Spjeldvik, W., Pugacheva, G. I., Gusev, A. A., Martin, I. M., and Sobolevsky, N. M.: Sources of inner Radiation Zone Energetic Helium Ions: cross-field transport versus in-situ nuclear reactions, Adv. Space Res., 21, 1675, https://doi.org/10.1016/S0273-1177(98)00013-1, 1998.

Townsend, L. W., Miller, T. M., and Gabriel, T. A.: HETC radiation transport code development for cosmic ray shielding applications in space, Radiation Protection Dosimetry, 116, 135–139, https://doi.org/10.1093/rpd/nci091, 2005.

Vázquez, L., Vázquez-Poletti, J. L., Sastre, M., Quitián, L., Martín, M. L., Valero, F., Llorente, I. M., and di Iorio, A.: The In-Situ Instrument for Mars and Earth Dating Applications (IN-TIME) project, Boletín electrónico de la SEMA, 23, 59–66, 2019.

Vazquez-Poletti, J. L., Llorente, I. M., Hinsen, K., and Turk, M.: Serverless computing: from planet Mars to the cloud, Comput. Sci. Engin., 20, 73–79, 2018.

Villamizar, M., Garces, O., Ochoa, L., Castro, H., Salamanca, L., Verano, M., and Lang, M.: Infrastructure cost comparison of running web applications in the cloud using AWS lambda and monolithic and microservice architectures, in: 2016 16th IEEE/ACM International Symposium on Cluster, Cloud and Grid Computing (CCGrid), 179–182, 2016.

Wilson, J. W., Tripathi, R. K., Cucinotta, F. A., Shinn, J. L., Badavi, F. F., Chun, S. Y., Norbury, J. W., Zeitlin, C. J., Heilbronn, L., and Miller, J.: NUCFRG2: An Evaluation of the Semiempirical Nuclear Fragmentation Database, Technical Report, NASA Langley Technical Report Server, 1–50, 1995.

Wilson, J. W., Slaba, T. C., Badavi, F. F., and Reddell, D. B.: 3DHZETRN: Neutron leakage in finite objects, Life Sci. Space Res., 7, 27–38, https://doi.org/10.1016/j.lssr.2015.09.003, 2015.

Wolff, M. J., Smith, M. D., Clancy, R. T., Arvidson, R., Kahre, M., Seelos, F., Murchie, S., and Savijärvi, H.: Wavelength dependence of dust aerosol single-scattering albedo as observed by the Compact Reconnaissance Imaging Spectrometer, J. Geophys. Res., 114, E00D04, https://doi.org/10.1029/2009JE003350, 2009.

Wolff, M. J., Clancy, R. T., Goguen, J. D., Malin, M. C., and Cantor, B. A.: Ultraviolet dust aerosol properties as observed by MARCI, Icarus, 208, 143–155, https://doi.org/10.1016/j.icarus.2010.01.010, 2010.

Yan, M., Castro, P., Cheng, P., and Ishakian, V.: Building a chatbot with serverless computing, in: Proceedings of the 1st International Workshop on Mashups of Things and APIs, 1–4, 2018.