the Creative Commons Attribution 4.0 License.

Tipping point analysis helps identify sensor phenomena in humidity data

Valerie N. Livina

Kate Willett

Stephanie Bell

Humidity variables are important for monitoring climate. Unlike, for instance, temperature, they require data transformation to derive water vapour variables from observations. Hygrometer technologies have changed over the years and, in some cases, have been prone to sensor drift due to aging, condensation or contamination in service, requiring replacement. Analysis of these variables may provide rich insight into both instrumental and climate dynamics. We apply tipping point analysis to dew point and relative humidity values from hygrometers at 55 observing stations in the UK. Our results demonstrate these techniques, which are usually used for studying geophysical phenomena, are also potentially useful for identifying historic instrumental changes that may be undocumented or lack metadata.

Studying climate variables requires complex measurements and transformations of data. During the period of a given continuous record, observations may be derived from successive instruments. Sensors may undergo multiple technical changes, from replacements of instruments to drifts and degradation. Detecting such changes, especially during earlier periods of deployment, which were not fully documented, is an important task that contributes to better interpretation of data that forms climate records – in particular, to enable homogenisation processes that identify and address inconsistent data (Menne and Williams, 2009; Peterson et al., 1998). Homogenisation, detection of changes, and recovery of missing metadata also contribute to generic FAIR data principles: Findable, Accessible, Interoperable, and Reusable. In particular, robust long-term analyses are necessary to assess climate change, and for this purpose changepoint detection at monthly timescales is relatively well established (Reeves et al., 2007).

There are various methods for homogenisation of monthly data (Domonkos et al., 2021; Venema et al., 2012) and some benchmarking of daily data (Killick et al., 2022; Brugnara et al., 2023), while hourly homogenisation is still a missing link. As we now move into an era of high-frequency and global-coverage climate services, there is a need for robust daily and even hourly analyses to assess extreme events, their intensity and frequency (Trewin, 2013; Brugnara et al., 2023). This makes monitoring climate sensor networks and their quality particularly important.

It is known that during the course of observations in the past few decades, manual observations were replaced by automatic ones, and that many analogue instruments were replaced by digital ones at various stages of environmental observations. Some of these changes were not fully documented, or the records are no longer accessible. Some instrument types, electronic hygrometers being one example, have exhibited significant drift during periods of service between checks. Furthermore, a station may have moved (yet its metadata identifies it as being at the same location), instruments may have been re-situated within the station grounds, or the local environment may have changed (urbanisation, city to airport, agricultural practices, afforestation or deforestation).

Therefore, it is important to analyse environmental records using suitable data techniques that are able to detect such artefacts and distinguish them from the natural phenomena being observed.

In the climatological community, there are various techniques developed for detection of outliers, which are used for verification of forecasts and data assimilation, i.e., combination of observations with short-range forecasts to estimate the current Earth system state.

In a series of ECMWF (European Centre for Medium-Range Weather Forecasts) publications (Dahoui et al., 2014, 2017, 2020; Dahoui, 2023), soft and hard limits were developed for detection of sudden changes and slow drifts in climate statistics. These techniques were designed for in-house use, for real-time operational data checks, in particular, regarding satellite data contamination. Some of these methods are based on monitoring changes in standard deviation (Dahoui et al., 2014), whereas others (Dahoui, 2023) utilise more complex algorithms that combine several ML/AI techniques, such as autoencoders, Long Short-Term Memory (LSTM) deep networks, and classifiers. This approach can lead to good results after training on a specific variable with long observations, but it may also suffer from the artefacts of overfitting.

Todling (2009), Todling et al. (2022), Waller et al. (2015), and Waller (2021) reviewed several techniques based on the approach that is called observation-minus-background or observation-minus-forecast (the former used for uncertainty quantification, while the latter one used by climate centres for ensemble forecasts and data assimilation). The essence of the approach is estimation of error covariance, which then can be used for analysis of observational deviations (which is the topic of the current paper). However, in such methods, several assumptions are applied, such as absence of correlations between observation and background errors, and the analysis errors are expected to be related linearly with observation and background errors. We note that such assumptions are not imposed when applying the technique proposed here.

ML/AI techniques have become popular in other areas of fault analysis, in particular, in engineering applications. These techniques range from regression-based residual analysis, with information criteria and error estimates for quantification of performance, to recently developed neural-network-based anomaly detection (for example, convolutional autoencoders), isolation forests, and support vector machines (Ciaburro, 2014).

In many such techniques, it is necessary to train a neural network or fit a model on historical data with known (labelled) anomalies. When such data is not available, the performance of poorly trained models may be unsatisfactory (80 % or lower).

Yet another approach that has been applied for fault detection is the Bayesian network (Yang et al., 2022). The approach provides uncertainty quantification, which is an advantage, but there are two major limitations. First, it is most suitable for a multivariate process, which means that the input of a single time series may not be sufficient. Second, Bayesian networks require control limits for anomaly detection, using likelihood indices, which is done using kernel density estimation, and this requires an additional choice of parameters (bandwidth values in several functional spaces). We applied a few such techniques to the Bingley dataset but the results were not satisfactory (not shown here).

Despite the available techniques described above, there is still a need for a technique that is sufficiently simple, yet sensitive, and, without supervision or multiple parameter fitting, capable of detecting diverse changes in time series.

In this article, we study humidity records of several decades using tipping point analysis that is capable of detecting long-term natural phenomena, such as climate change, as well as abrupt changes of data patterns caused by technical artefacts. This is the first time that tipping point analysis is deployed for such a purpose, and we demonstrate its usability for detection of instrumental changes. If used in real-time, utilising a small window size for up-to-now available data (thus reducing uncertainty in timing the change), tipping point analysis may help identify drifting of sensors and, in principle, prevent prolonged recording of poor-quality data. If used for historic datasets, a change in the noise characteristics might help identify a change in technology (for example from psychrometer to electronic hygrometer) in cases where this might not otherwise be recorded or detectable. Improving such information can potentially improve the attribution of uncertainty in cases where only a worst-case uncertainty might otherwise be assigned.

2.1 Overview of method

In the work reported here, the technique more conventionally used to identify tipping points of complex systems was applied to several time series of near-surface observations of air temperature and of humidity at UK locations. After potential change points were identified using the analysis, the observation records and their metadata were assessed to investigate whether the identified change points corresponded to significant events such as the change of an instrument or of a recording technique.

2.2 Tipping Point Analysis

After Poincaré's pioneering work on bifurcation theory (Poincare, 1892), in the 1960s and 1970s an intuitive idea of a bifurcation appeared in social sciences as the term “tipping point” coined by sociologists. Malcolm Gladwell published a bestselling book on tipping points (Gladwell, 2000), where he expanded the approach to biophysical systems. Lenton et al. (2008) published the seminal paper on tipping elements in the Earth system, in which the main geophysical tipping elements were described. Lenton's work prompted multiple publications in geophysics and paleoclimate, focussing on early-warning signals (EWS) of tipping points (Livina, 2023). Applications of tipping point analysis have been found in geophysics (Lenton et al., 2008; Livina et al., 2010, 2011, 2013), statistical physics (Vaz Martins et al., 2010), ecology (Dakos et al., 2012), structural health monitoring (Livina et al., 2014) and failure dynamics in semiconductors (Livina et al., 2020). Most of these studies were focussed on long-range changes in dynamical systems, such as climate change, or on engineering phenomena, such as failures of devices or installations. In this paper, we return to geophysical applications, but with the purpose of studying sensor conditions, which bridges the gap between such applications and broadens the scope of tipping point analysis. We also supplement the analysis with a Bayesian estimate of significance of changes, which we illustrate on humidity data.

We propose to use the tipping observed in fluctuations (changes in the patterns of a dataset noise, which are not necessarily in the form of a geophysical tipping point) to identify changes in the patterns of the instrumental data, attributable to changes of the instrument or the observing technique.

The basis of the EWS in tipping point analysis is monitoring changes of patterns in fluctuations using approximating stochastic models. Such models are powerful yet simple tools for modelling time series of real-world dynamical systems. Given a one-dimensional trajectory of a dynamical system (the recorded time series), the system dynamics can be modelled by the stochastic equation with state variable z and time t:

$$\dot{z}(t) = D(z,t) + S(z,t), \tag{1}$$

where $\dot{z}$ is the time derivative of the system variable z(t), and D and S are deterministic and stochastic components, respectively. Component D(z,t) may be stationary or dynamically changing (for instance, containing long-term or periodic trends or both).

The tipping-point analysis consists of the following three stages: (1) anticipating (pre-tipping, or EWS detection/analysis), (2) detecting (tipping), and (3) forecasting (post-tipping). For the purpose of the current analysis, we use only the first stage of the tipping point analysis: anticipation, or early-warning signal.

Anticipating tipping points (pre-tipping) is based on the effect of critical slowing down of the dynamics of the system prior to critical behaviour, i.e., increasing return time to equilibrium (Wissel, 1984). When a system state becomes unstable and starts a transition to another state, the response to small perturbations becomes slower. This “critical slowing down” can be detected as increasing autocorrelations (ACF) in the time series (Held and Kleinen, 2004). Alternatively, the short-range scaling exponent of Detrended Fluctuation Analysis (DFA) (Peng et al., 1994) may be monitored (Livina and Lenton, 2007). The lag-1 autocorrelation (Brockwell and Davis, 2016) is the value of the autocorrelation function at lag = 1, the autocorrelation function being the average dependence between elements of time series at various steps, or lags. Lag-1 ACF is calculated in sliding windows of fixed length (conventionally, half of the series length) or variable length (for uncertainty estimation) along the time series, which produces a time series of an early-warning indicator. This indicator describes the structural dynamics of the time series. If the time series of the indicator remains flat and stable, the time series does not undergo a critical change (whether bifurcational or transitional).
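The sliding-window calculation described above can be sketched as follows. This is a minimal illustration on synthetic data, not the code used in this study: the function names `lag1_autocorrelation` and `acf_indicator`, the window of 400 points, and the synthetic AR(1) series whose memory increases halfway through are all assumptions made for the example.

```python
import numpy as np

def lag1_autocorrelation(x):
    """Sample lag-1 autocorrelation of a 1-D array."""
    x = np.asarray(x, dtype=float)
    d = x - x.mean()
    denom = np.dot(d, d)
    if denom == 0.0:
        return np.nan  # constant window: autocorrelation undefined
    return np.dot(d[:-1], d[1:]) / denom

def acf_indicator(x, window):
    """Lag-1 ACF in sliding windows of fixed length `window`."""
    x = np.asarray(x, dtype=float)
    n_windows = len(x) - window + 1
    return np.array([lag1_autocorrelation(x[i:i + window])
                     for i in range(n_windows)])

# Synthetic series (illustrative only): AR(1) noise whose
# coefficient steps from 0.3 to 0.8 at the midpoint.
rng = np.random.default_rng(0)
n = 4000
c = np.where(np.arange(n) < n // 2, 0.3, 0.8)
z = np.zeros(n)
for t in range(n - 1):
    z[t + 1] = c[t] * z[t] + rng.standard_normal()

indicator = acf_indicator(z, window=400)
```

In this synthetic case the indicator stays near 0.3 while the windows lie in the first half of the series and rises towards 0.8 once they lie in the second half, which is the kind of change in fluctuation pattern the early-warning indicator is meant to reveal.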

If the indicator rises to a critical value of 1 (as one is the maximal value of normalised autocorrelation, provided no detrending or filtering is applied to the input data), this is a signature of an early warning signal of critical behaviour. However, when any pre-processing is applied to the input data, this is likely to modify autocorrelation values, and the indicator may not reach the maximal value 1. In this case, the important property of the indicator is its monotonic increasing trend. Such a monotonic trend of the EWS indicator can be estimated, for instance, using Kendall rank correlation.
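As an illustration of how the monotonic trend of an indicator can be quantified, the sketch below computes the Kendall rank correlation between a series and its time index. It is a plain O(n²) implementation of the tau-a variant (no tie correction), which is an assumption of this example; the function name `kendall_tau_vs_time` is hypothetical.

```python
import numpy as np

def kendall_tau_vs_time(y):
    """Kendall tau-a between a series and its time index.

    Counts concordant minus discordant pairs (i < j) and divides
    by the total number of pairs. O(n^2), so long indicator
    series may need to be subsampled first.
    """
    y = np.asarray(y, dtype=float)
    n = len(y)
    s = 0.0
    for i in range(n - 1):
        s += np.sign(y[i + 1:] - y[i]).sum()
    return s / (0.5 * n * (n - 1))

rising = np.arange(10.0)
falling = rising[::-1]
tau_up = kendall_tau_vs_time(rising)     # strictly increasing series
tau_down = kendall_tau_vs_time(falling)  # strictly decreasing series
```

A strictly increasing indicator gives a coefficient of 1 and a strictly decreasing one gives −1; noisy indicators fall in between.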

Lag-1 autocorrelation is estimated by fitting an autoregressive process of order 1 (AR1), which is a common modelling tool in time series analysis:

$$z_{t+1} = c\,z_t + \sigma\eta_t, \tag{2}$$

where ηt is a Gaussian white noise process of unit variance, σ is the noise level as a standard deviation, and $c = \exp(-\kappa\Delta t)$ is the “ACF-indicator” with κ the decay rate of perturbations and Δt the time step. Then, c→1 as κ→0 when a tipping point is approached. In addition, the DFA method utilises built-in detrending of a chosen polynomial order, which allows transitions and bifurcations to be distinguished in the EWS. These features can be identified by comparing several early-warning indicators, with and without detrending data in sliding windows (Livina et al., 2012). The paper of Livina and Lenton (2007) provided the first application of the DFA-based early-warning indicator to the paleotemperature record with detected transition using both ACF and DFA indicators.
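A minimal sketch of fitting an AR(1) process by least squares is given below. It assumes the usual discrete form, in which each value equals the coefficient c times the previous value plus Gaussian noise of standard deviation σ, and it assumes that the decay rate relates to the coefficient through c = exp(−κΔt). The function name `fit_ar1` and the synthetic test series are illustrative, not taken from the study.

```python
import numpy as np

def fit_ar1(z, dt=1.0):
    """Least-squares fit of z[t+1] = c*z[t] + sigma*eta[t].

    Returns (c, sigma, kappa), where kappa = -ln(c)/dt is the
    decay rate implied by the assumed relation c = exp(-kappa*dt).
    """
    z = np.asarray(z, dtype=float)
    z = z - z.mean()
    c = np.dot(z[:-1], z[1:]) / np.dot(z[:-1], z[:-1])
    resid = z[1:] - c * z[:-1]
    sigma = resid.std(ddof=1)
    kappa = -np.log(c) / dt if c > 0 else np.nan
    return c, sigma, kappa

# Synthetic AR(1) series with known parameters (illustrative only)
rng = np.random.default_rng(2)
true_c, true_sigma = 0.9, 0.5
series = np.zeros(20000)
for t in range(len(series) - 1):
    series[t + 1] = true_c * series[t] + true_sigma * rng.standard_normal()

c_hat, sigma_hat, kappa_hat = fit_ar1(series)
```

As the fitted coefficient approaches 1, the implied decay rate approaches 0, which is the critical slowing down that the indicator tracks.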

Detection of a tipping point is performed using dynamical potential analysis. The technique detects a bifurcation in a time series and the time when it happens, which is illustrated in a potential plot mapping by colour the potential dynamics of the system (Livina et al., 2010, 2011). The technique of potential forecasting is based on dynamical propagation of the probability density function of the time series (Livina et al., 2013). In the current paper, we do not perform the detection and forecasting stages of tipping point analysis and focus only on anticipating tipping (EWS). The detection technique, which focuses on the number of system states, describes only a subset of the possible changes (genuine bifurcations), and this would be a limitation in the current study.

The theory of tipping point analysis is generic, and here it is useful for detection, for example, of such cases where a hygrometer progressively under-reads (locally indistinguishable from true value), then a site check establishes that the instrument is out of specification, and it is replaced with a new calibrated hygrometer. In data, such a change would look like a local step-change in the data that follows some drifting trend (this trend may have provided a short EWS). Another case could be that a wet- and dry-bulb hygrometer read a few times per day is replaced with an electronic sensor (that reads at the same temporal intervals), which is then replaced by an automated reading protocol logged at 1 min intervals (these datasets might have different effective resolution, or different error (noise) characteristics). Such a change of sampling rate would immediately produce stronger autocorrelations in the data, which would be detectable using the lag-1 autocorrelation EWS technique. We are also interested in detecting more complex instrument changes, such as station/instrument moves, instrument drifts, and various local environment changes.

2.3 Humidity measurements

Following Willett et al. (2014), calculation of relative humidity in this work is based on several input and derived variables as follows:

- Vapour pressure with respect to water (e) in hectopascals
- Vapour pressure with respect to ice (eice) in hectopascals
- Station pressure (Pmst) in hectopascals
- Relative humidity (RH) in percent relative humidity

Here T is the station climatological monthly mean temperature, Td is the dew-point temperature (in kelvin), Pmsl is the pressure at mean sea level, Z is the height in metres, and es is the saturated vapour pressure.
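The calculation of these variables can be sketched as below. This is not the code used in the study. The coefficients are assumed to be the Buck (1981) values commonly quoted for this formulation and should be checked against Willett et al. (2014) before any use; the conversion of temperatures from kelvin to degrees Celsius inside the functions, the use of the same water formula for the saturated vapour pressure, and all function names are likewise assumptions of this example.

```python
import numpy as np

def vapour_pressure_water(td_k, p_hpa):
    """Vapour pressure over water (hPa); td_k in K, p_hpa in hPa."""
    td = np.asarray(td_k, dtype=float) - 273.15  # assumed: formula uses deg C
    f_w = 1.0 + 7e-4 + 3.46e-6 * p_hpa           # assumed enhancement factor
    return 6.1121 * f_w * np.exp((18.729 - td / 227.3) * td / (257.87 + td))

def vapour_pressure_ice(td_k, p_hpa):
    """Vapour pressure over ice (hPa); td_k in K, p_hpa in hPa."""
    td = np.asarray(td_k, dtype=float) - 273.15  # assumed: formula uses deg C
    f_i = 1.0 + 3e-4 + 4.18e-6 * p_hpa           # assumed enhancement factor
    return 6.1115 * f_i * np.exp((23.036 - td / 333.7) * td / (279.82 + td))

def station_pressure(p_msl_hpa, t_clim_k, z_m):
    """Station pressure (hPa) from mean-sea-level pressure, the
    climatological monthly mean temperature (K) and height (m)."""
    return p_msl_hpa * (t_clim_k / (t_clim_k + 0.0065 * z_m)) ** 5.625

def relative_humidity(t_k, td_k, p_hpa):
    """RH (%) as 100 * e / es, with es evaluated at air temperature."""
    e = vapour_pressure_water(td_k, p_hpa)
    e_s = vapour_pressure_water(t_k, p_hpa)
    return 100.0 * e / e_s

# Illustrative values only: 15 deg C air, 10 deg C dew point, 1000 hPa
rh_example = relative_humidity(288.15, 283.15, 1000.0)
p_example = station_pressure(1013.25, 283.15, 100.0)
```

With these assumed coefficients, a dew point equal to the air temperature gives 100 % relative humidity, and the station pressure decreases with height as expected.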

We consider humidity observations in the UK that span several decades from the Met Office Hadley Centre's Integrated Surface Dataset (HadISD), see Dunn et al. (2012, 2016), and Dunn (2019). This is a global hourly land surface dataset of core meteorological variables originally archived by NOAA's National Centers for Environmental Information (NCEI) as its Integrated Surface Dataset (ISD), see Smith et al. (2011). The HadISD data have been quality controlled to remove random errors, with some station merging and duplicate removal to create long-term records.

Initially, datasets of 56 stations were provided, many of them with large gaps. After removing data before the largest gap in each of them, 55 stations were selected (the 56th being too short after truncation). We have removed large gaps, i.e., intervals with absent data – these contain no statistics and it is not clear how to use them for EWS analysis. Filling large gaps with interpolated data would affect autocorrelations and thus introduce undesirable bias in early-warning indicators, which would distort searches for instrumental changes in the data.
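The truncation step can be illustrated with a short sketch that locates the largest gap between consecutive timestamps and keeps only the data after it. The function name `keep_after_largest_gap` and the `min_gap` threshold (below which a record is left untouched) are assumptions of this example and are not taken from the processing used here.

```python
import numpy as np

def keep_after_largest_gap(times, values, min_gap):
    """Drop everything up to and including the largest gap.

    times   : increasing timestamps (e.g. hours since a reference)
    values  : observations at those timestamps
    min_gap : hypothetical threshold; if the largest gap is smaller
              than this, the record is returned unchanged
    """
    times = np.asarray(times, dtype=float)
    values = np.asarray(values, dtype=float)
    gaps = np.diff(times)
    if gaps.size == 0 or gaps.max() < min_gap:
        return times, values
    start = int(np.argmax(gaps)) + 1  # first sample after the gap
    return times[start:], values[start:]

# Illustrative hourly record with one long gap after hour 3
hours = np.array([0, 1, 2, 3, 500, 501, 502, 503, 504], dtype=float)
obs = np.arange(hours.size, dtype=float)
t_kept, v_kept = keep_after_largest_gap(hours, obs, min_gap=24.0)
```

Truncating rather than interpolating keeps the autocorrelation structure of the remaining data intact, which is the reason given above for not filling large gaps.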

One of the motivations for this study was that in many cases of long-term climatological observations, metadata might be missing or difficult to obtain (some documentation may still not be digitised).

While there were station-level measurements of pressure (variable “stnlp”), these records were often short or patchy and the data quality was quite poor. Instead of these station pressure measurements, we used a climatological surface pressure from the nearest gridbox of the ECMWF ERA5 (Reanalysis v5) product.

Using the HadISD dewpoint and temperature variables and pressure variables from the ECMWF ERA5 for the considered area (Hersbach et al., 2020), we obtained the relative humidity using Eqs. (3a)–(3f). The reanalysis pressure variable, after the necessary temporal interpolation, is an acceptable approximation for the purposes of our data processing.

Dewpoint and relative humidity datasets were pre-processed as follows:

- Where large gaps were observed, the initial part of the data that included the gap was removed.
- Global trend and seasonality were removed using SSA (singular spectrum analysis) (Broomhead and King, 1986) from both dewpoint and relative humidity variables. SSA decomposes the input time series into a sum of components (low-frequency trend, seasonal modulations, and detrended noise). The method is based on the singular value decomposition of a special matrix constructed from the time series. The advantage of the method is that neither a parametric model nor stationarity conditions need to be assumed for the time series. This makes SSA a versatile tool of time series analysis. Detrending using SSA can affect the level of autocorrelations but not the trend in the indicator time series. EWS is estimated as a monotonic positive trend, and this trend is present in the indicators of both the raw and the detrended data.
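The SSA detrending step in the list above can be sketched in its basic form: embed the series in a trajectory matrix, take its singular value decomposition, rebuild the series from the leading components by diagonal averaging, and subtract. This is a minimal sketch rather than the implementation used here; the function name `ssa_reconstruct`, the window of 120 points, the choice of the four leading components, and the synthetic series are all assumptions of the example.

```python
import numpy as np

def ssa_reconstruct(x, window, components):
    """Reconstruct a series from selected SSA components."""
    x = np.asarray(x, dtype=float)
    n = len(x)
    k = n - window + 1
    # Trajectory (Hankel) matrix: column j holds x[j:j+window]
    traj = np.column_stack([x[j:j + window] for j in range(k)])
    u, s, vt = np.linalg.svd(traj, full_matrices=False)
    approx = (u[:, components] * s[components]) @ vt[components, :]
    # Diagonal averaging back to a 1-D series
    recon = np.zeros(n)
    counts = np.zeros(n)
    for j in range(k):
        recon[j:j + window] += approx[:, j]
        counts[j:j + window] += 1
    return recon / counts

# Synthetic series (illustrative): linear trend + 12-step cycle + noise
rng = np.random.default_rng(3)
t = np.arange(480)
signal = 0.02 * t + 2.0 * np.sin(2 * np.pi * t / 12)
x = signal + rng.normal(0.0, 0.3, t.size)

smooth = ssa_reconstruct(x, window=120, components=[0, 1, 2, 3])
resid = x - smooth  # detrended fluctuations passed on to the EWS step
```

In this example the four leading components capture the trend and the seasonal cycle, so the residual is close to the added noise. For real records the number of components to remove has to be chosen by inspecting the singular spectrum.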

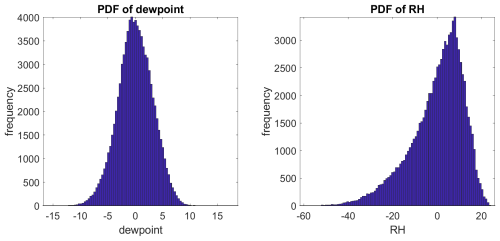

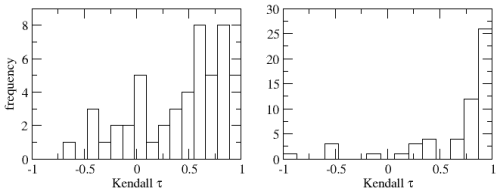

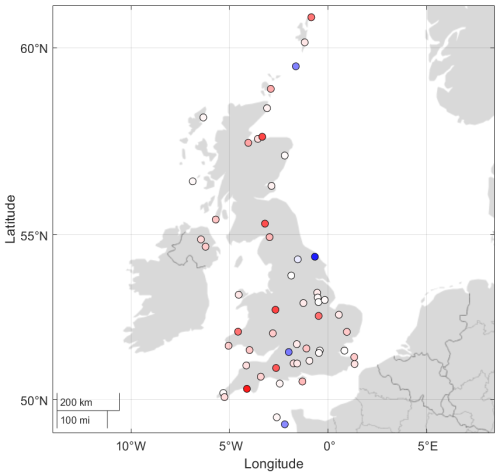

Detrended fluctuations of dewpoint and relative humidity were analysed using lag-1 ACF for EWS for the purpose of detecting instrumental changes. The strength of the EWS trend was quantified using the Mann-Kendall coefficient for assessment of monotonic trend (a perfectly increasing trend gives a Kendall value of 1, a perfectly decreasing one −1). For the considered 55 stations, averaged Kendall values of dewpoint detrended fluctuations are 0.30±0.54, whereas for relative humidity detrended fluctuations, the Kendall values are 0.68±0.44 (mean and standard error). This is summarised in Fig. 1.

Figure 1. Histograms of Mann-Kendall coefficients of monotonic trends in early-warning indicators for dewpoint (left panel) and relative humidity (right panel). The window size for calculation of indicators was 10 % of time series length (as the records had different lengths). High positive values of the coefficient denote a significant increasing trend indicating EWS.

Pre-processing of data may influence autocorrelations: when a smoothing filter is applied, correlations increase, which may push the autocorrelation indicator to fluctuate very close to the critical value 1. Such EWS values are usually not informative, and detection of sensor changes would be unfeasible. However, in our paper we apply detrending, which acts in the opposite way to smoothing: detrending removes the low-frequency trend, and autocorrelations diminish.

Despite these modifications, the main object of interest is the monotonic trend in the EWS indicator, which denotes the change in the dynamics of fluctuations. The absolute values of the EWS indicator are less important in this context.

Significant negative trends in both variables were observed in datasets for the locations of Leeming, Fylingdales, Lyneham, and Jersey. Such effects in early-warning indicators may denote stabilisation (opposite to a critical transition), which may be, for example, due to urban effects on the instruments, such as loss of shrubs and building up of green areas.

Autocorrelations were analysed using a short single window. Conventionally, a much larger window size is used for tipping point analysis, as the phenomenon of interest in geophysics is usually of longer scale (decades), often related to climate change. In this case, we need to consider short-term events (replacement of an instrument or its drift/calibration, station moves, local environment changes), and a short window with a smaller subset of data for averaging provides higher sensitivity for the purpose of our analysis. The size of the sliding window should be selected in such a way that high-frequency fluctuations (which produce maximal lag-1 autocorrelations) would be smoothed out, whereas intrinsic EWS would be detected (usually increasing in the interval [0.7,1] – see the examples in the Appendix). The choice of window can be explored and furthermore automated using statistical techniques (such as scaling analysis with long-term exponents), because each geophysical variable has signature scaling behaviour, and therefore needs to be investigated individually. In this case study, we selected a suitably small window size, which produced detectable EWS for the purposes of our analysis. In general, the smaller the window size, the better for studying sensor phenomena, as we want to identify nonstationarities, possibly caused by instrumental artefacts and sometimes reversed short-term transitions (for example, transient extreme events). In principle, it is possible to study the same time series with different windows of early-warning indicators, for different purposes: the shortest one to identify instrument replacement, and a longer one for studying environmental changes, such as encroachment of town or forest. EWS indicators with short windows are sensitive to short-term trends. However, such an indicator can still contain signatures of longer-term trends, although with noisy patterns. The choice of the window requires balancing the sampling rate of the time series against the dynamics of the phenomenon of interest. For example, for studying climate change effects, which manifest at the scale of several decades, it is necessary to consider an indicator with a sufficiently large sliding window; for studying rapid changes in the time series, which may be due to instrument change or sensor deterioration, a much smaller window size is necessary. Indicators with a large window size usually have smoother patterns due to aggregation and averaging of large subsets of data.

The spatial distribution of the detected long-term changes in the EWS indicators across the UK for relative humidity is mapped according to the Mann-Kendall coefficient for detection of monotonic trends, which is illustrated in Fig. 2. This plot shows where the long-term changes in humidity are more pronounced (large blue dots), and this can be further analysed in terms of local micro-climates and city/country distributions.

Figure 2. Spatial mapping of linear trends in EWS indicators for the detrended fluctuations of relative humidity in the UK. Trends were fitted over the whole range of each indicator. The colour denotes the direction of the trend (red – positive, blue – negative). The shade of each dot is defined by the value of the leading coefficient: darker red for a higher positive value, darker blue for a lower negative value.

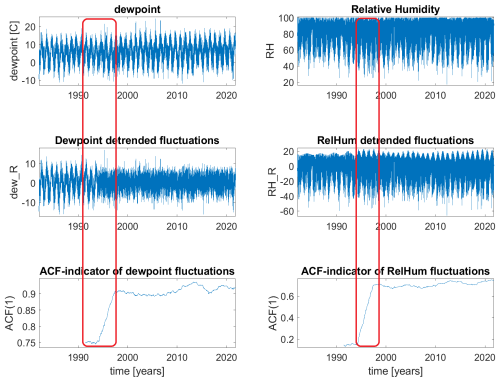

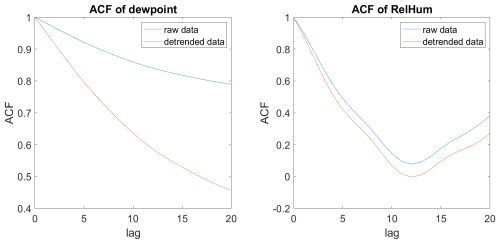

We consider the Bingley station (Midlands countryside) as a representative example for detailed analysis. It is known that there was a period of instrumental changes in environmental sensors. For example, in the 1980s–1990s the earlier analogue instruments were replaced with modern digital ones, which can be detected using ACF-based indicators of the tipping point analysis. Figure 3 illustrates such a clear change at a glance. It is interesting that the probability density functions of dew point and RH have different shapes (skewness), probably due to the nonlinearity of the equations linking them, as can be seen in Fig. 4. Autocorrelation functions differ, too, which reflects memory in the data (i.e., internal dependencies between the points of time series), as can be seen in Fig. 5.

Figure 3. Bingley dew point and relative humidity (upper panels), their detrended fluctuations (middle panels) and EWS indicators calculated with 10 % sliding windows. The red boxes denote the intervals of transitions: in the input time series the changes are not visible, whereas in the detrended series one can notice the change of pattern, which is then clearly detected by the EWS indicator – the likely instrumental change in 1994.

Figure 5. Autocorrelation functions of the Bingley dew point and relative humidity, with and without detrending.

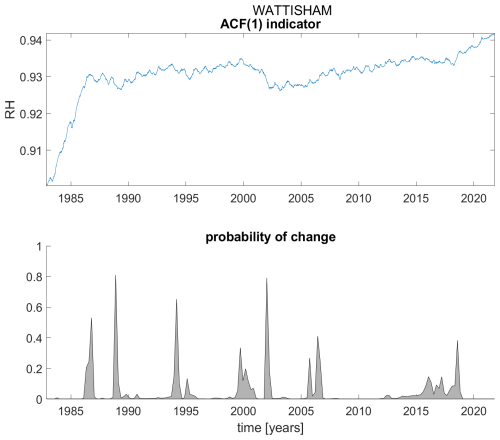

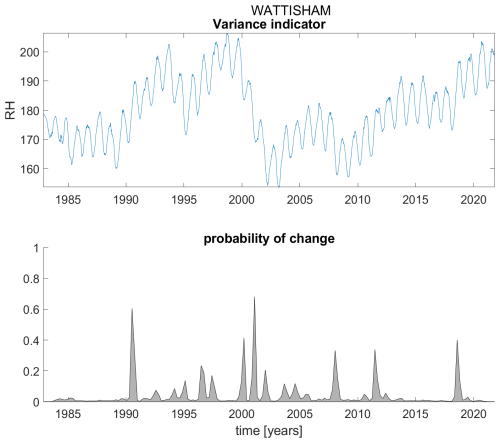

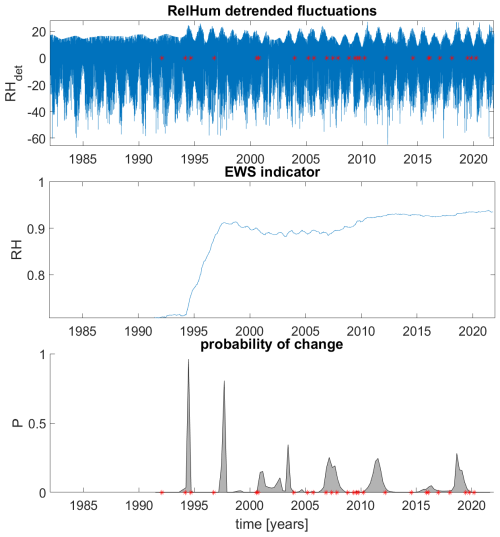

To perform automatic identification of instrumental changes, one can use probabilistic estimation of change points in the EWS indicators. By applying the MATLAB package “Bayesian Changepoint Detection & Time Series Decomposition” (Zhao et al., 2019), we identify change points in EWS indicators, specifically those of statistical significance. As can be seen in the following plots, the statistically significant changes in the indicators can be identified from the probabilities of change based on the Bayesian ensembles with Monte Carlo simulations (Zhao et al., 2019). The considered variable was detrended relative humidity, for which the ACF-indicator was calculated and then analysed using the Bayesian model ensemble. The changes with statistically significant peaks (probability > 0.8) are narrow, and the timing of change events can be identified with a small uncertainty of several weeks, if necessary.
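To illustrate the idea of a change-point probability, the sketch below computes the posterior distribution of a single change in the mean of a series. It is a deliberately simplified stand-in and not the Bayesian model ensemble of Zhao et al. (2019): it assumes exactly one change point, Gaussian noise with a common unknown variance, flat priors on the two segment means and a 1/σ prior on the noise level. The function name `changepoint_posterior` and the synthetic indicator are hypothetical.

```python
import numpy as np

def changepoint_posterior(y):
    """Posterior over the position of one mean shift.

    Under the stated assumptions the marginal posterior is
    p(tau | y) ∝ [tau * (n - tau)]^(-1/2) * RSS(tau)^(-(n - 2)/2),
    where RSS(tau) is the residual sum of squares of a two-segment
    constant fit with the first segment y[:tau].
    """
    y = np.asarray(y, dtype=float)
    n = len(y)
    taus = np.arange(2, n - 1)  # at least two points per segment
    csum = np.cumsum(y)
    csum2 = np.cumsum(y ** 2)
    n1, n2 = taus, n - taus
    s1 = csum[taus - 1]
    s2 = csum[-1] - s1
    q1 = csum2[taus - 1]
    q2 = csum2[-1] - q1
    rss = (q1 - s1 ** 2 / n1) + (q2 - s2 ** 2 / n2)
    logp = -0.5 * np.log(n1 * n2) - 0.5 * (n - 2) * np.log(rss)
    logp -= logp.max()  # stabilise before exponentiating
    p = np.exp(logp)
    return taus, p / p.sum()

# Synthetic indicator (illustrative): step from 0.3 to 0.7 at index 300
rng = np.random.default_rng(4)
y = np.concatenate([rng.normal(0.3, 0.05, 300), rng.normal(0.7, 0.05, 200)])
taus, post = changepoint_posterior(y)
tau_map = int(taus[np.argmax(post)])
```

For an abrupt step of this size the posterior is sharply peaked around the true change index, which mirrors the narrow probability peaks described above. Multiple changes, trends and seasonality require a fuller model such as the cited package.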

Because the series vary in length, while the window length is 10 % of the series length, the sliding window aggregates a different amount of data in each case. The window sizes are comparable but vary from series to series. Furthermore, there is an uncertainty due to aggregation of data within a selected window. This uncertainty can be reduced by using progressively smaller windows when precise timing of the change is required. In this paper, we aim to demonstrate that such detection is possible in principle, and in further work we can consider the problem of timing with reduced uncertainties.

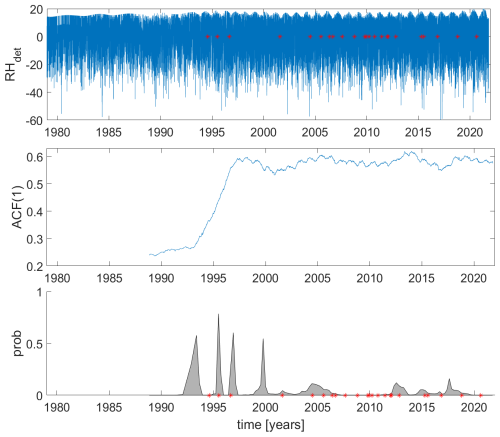

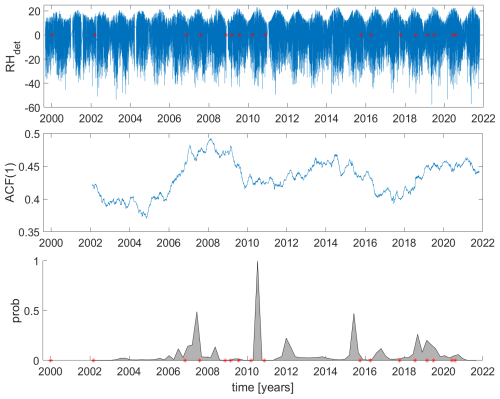

In the case of Bingley, there are two statistically significant changes, in 1994 and in 1998, which are indicated in the lower panel with probabilities of changes, see Fig. 6. The red dots in the upper panels denote the recorded changes of operations (these data were provided by the Met Office for verification), for which the major changes from manual to automatic were detected by the abrupt increases in indicators. The introduced method of instrumental recording changed the patterns of autocorrelations, and this is detectable by the EWS indicators. In the later period, short intermittencies did not affect the indicators as much as the major change of the technology in the 1990s, yet many of them can be seen in the probabilistic detections in the bottom panel of Fig. 6.

Figure 6. Bingley detrended (det) fluctuations of relative humidity, its ACF-indicator with a small window, which is suitable for detection of instrumental changes with change of fluctuation patterns (middle panel), and Bayesian analysis of the indicator time series, which denotes probabilities of changes (lower panel). Red dots in the lower and upper panels denote the recorded changes of operations.

In further discussions of the known instrument changes, it was mentioned that in Bingley, in 1994 and 1998, there were changes from manual to automatic, as well as a change of the local airfield, which is an excellent confirmation of the detected abrupt transition. Moreover, the smaller detections in the years 2007, 2011, 2018 were reported to be related to routine replacements of sensors at Bingley. This demonstrates the capability of these techniques in sensitive detection of instrumental change.

For comparison, we plot equivalent results for stations Camborne (Fig. 7) and Carlisle (Fig. 8). We note that both of these analyses show strong detections of changes in the 1990s.

Figure 7. Camborne detrended (det) fluctuations of relative humidity, its ACF-indicator with a small window, which is suitable for detection of instrumental changes with change of fluctuation patterns (middle panel), and Bayesian analysis of the indicator time series, which denotes probabilities of changes (lower panel). Red dots in the lower and upper panels denote the recorded changes of operations.

Figure 8. Carlisle detrended (det) fluctuations of relative humidity, its ACF-indicator with a small window, which is suitable for detection of instrumental changes with change of fluctuation patterns (middle panel), and Bayesian analysis of the indicator time series, which denotes probabilities of changes (lower panel). Red dots in the lower and upper panels denote the recorded changes of operations.

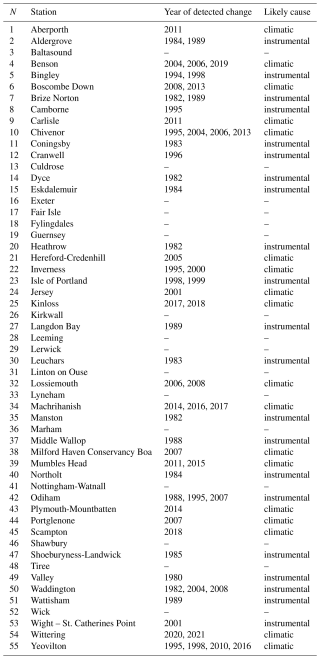

The results of the analysis of the changes in the indicators for relative humidity for the large set of 55 observing stations are summarised in Table A1 in the Appendix. Abrupt changes in EWS indicators are likely to be instrumental, whereas gradual ones are likely to be climatic (either natural, such as long-term climate change, or anthropogenic, such as urbanisation and change of local environment). The reported detections were later confirmed by available records of instrumental changes for some of the stations.

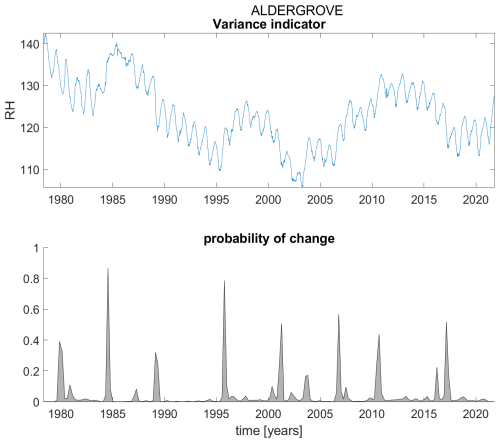

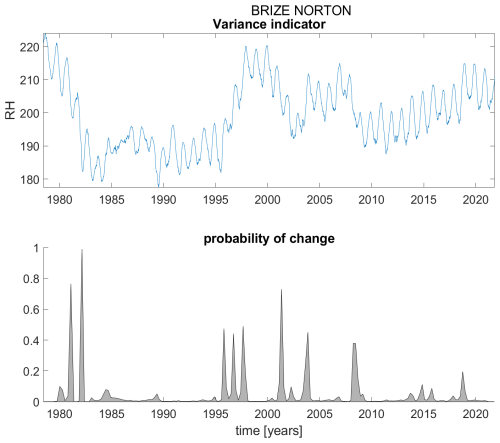

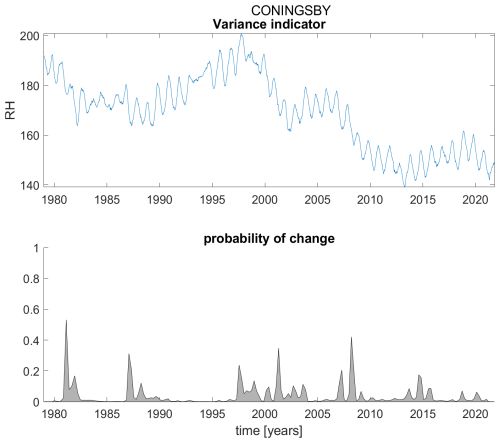

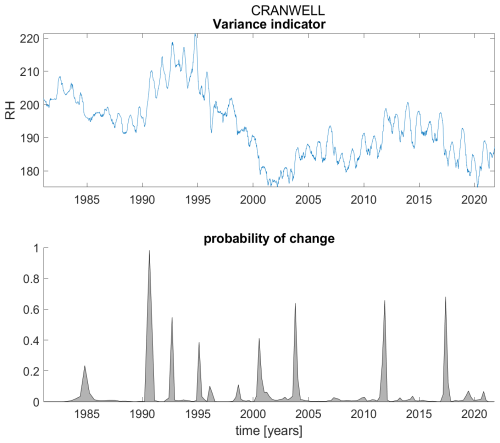

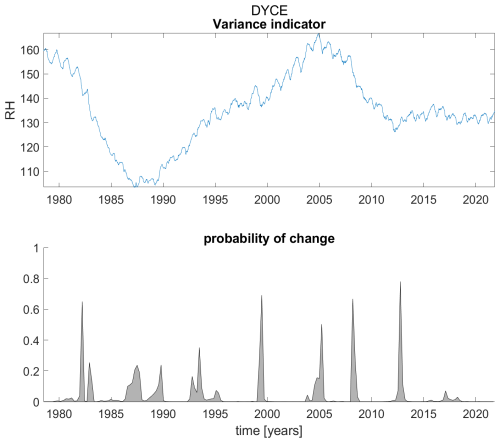

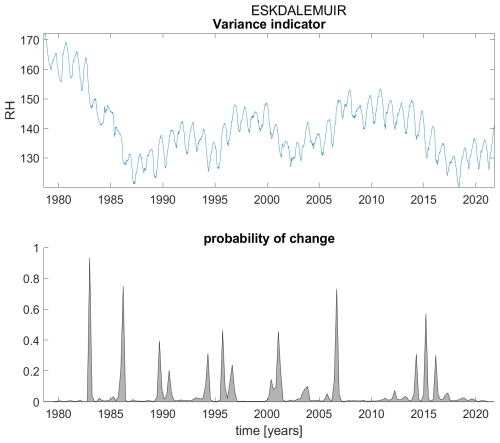

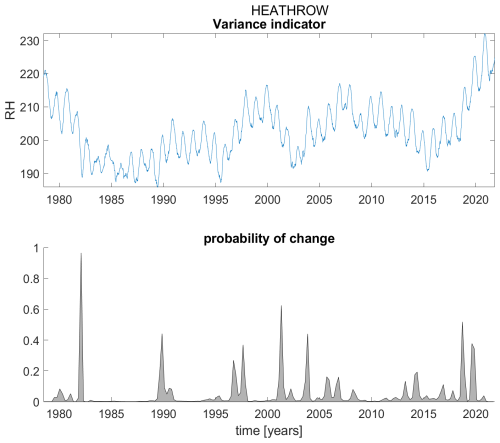

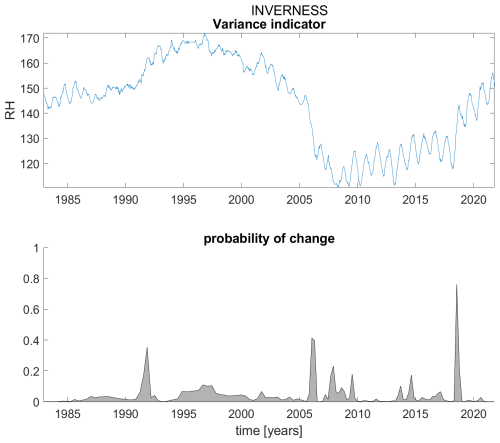

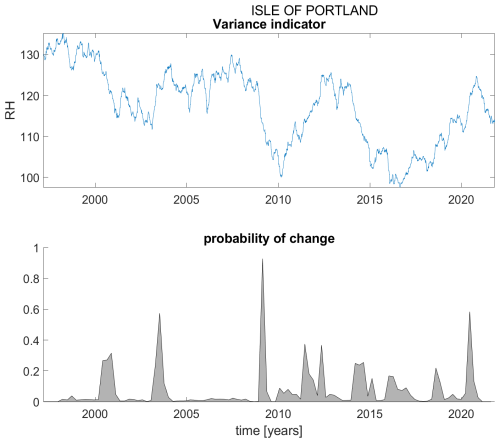

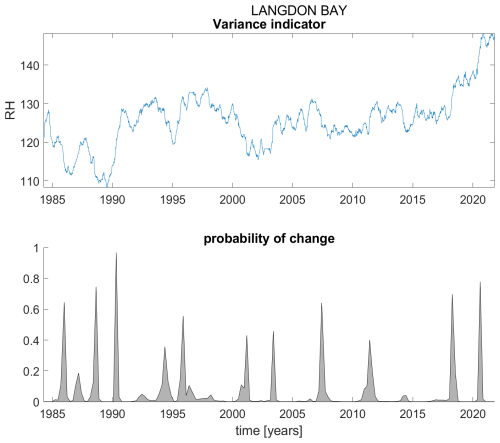

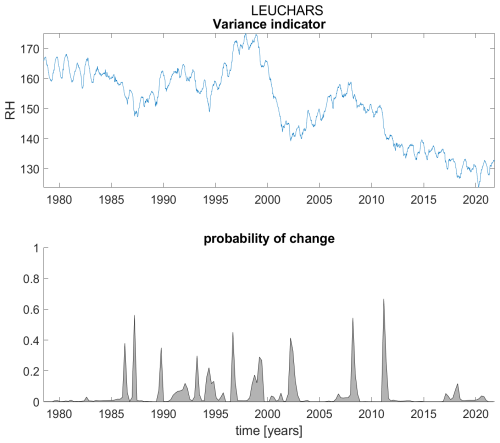

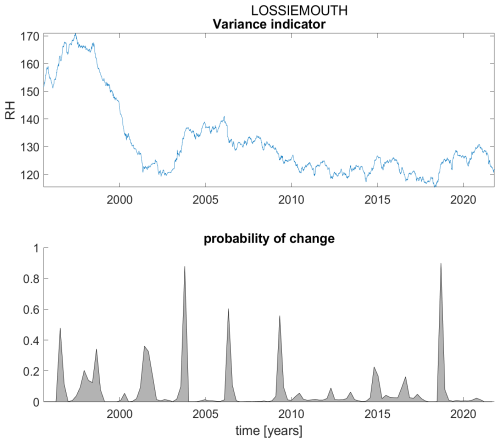

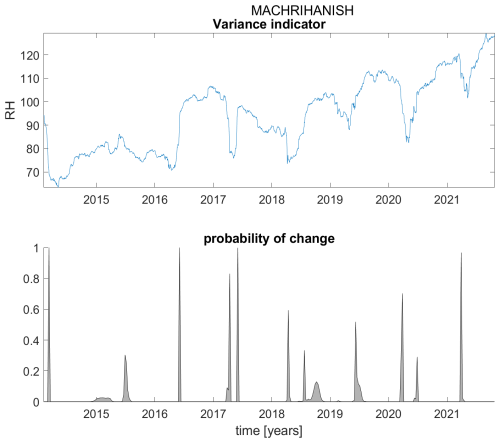

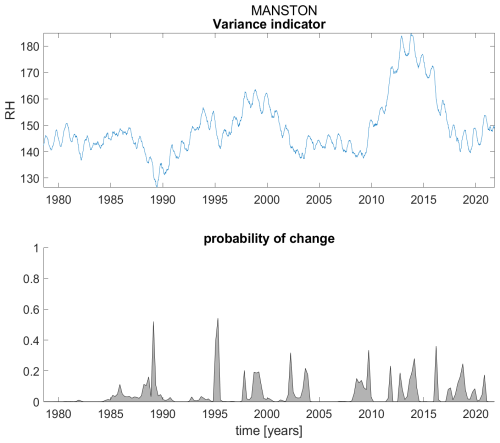

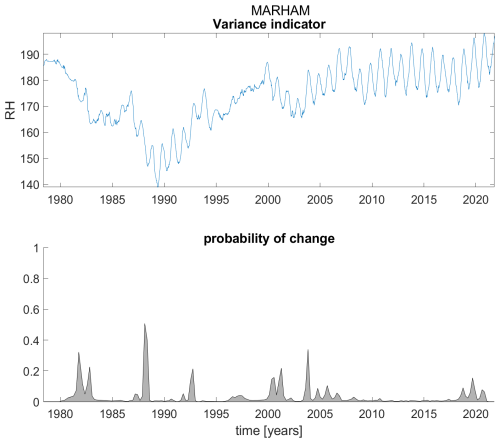

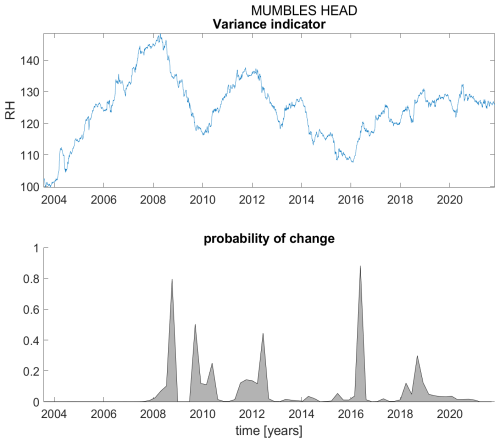

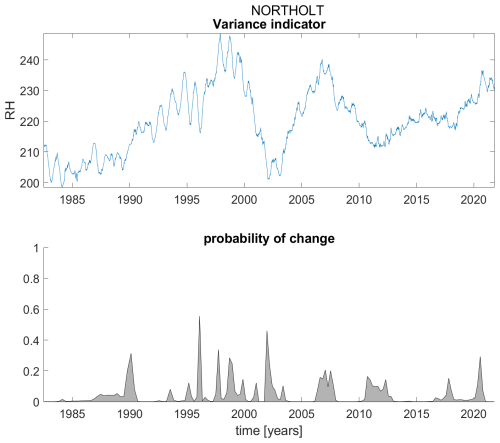

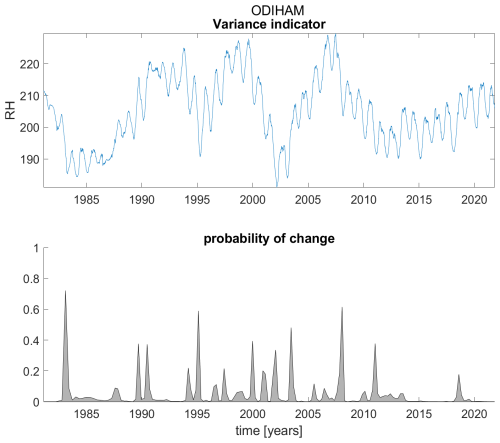

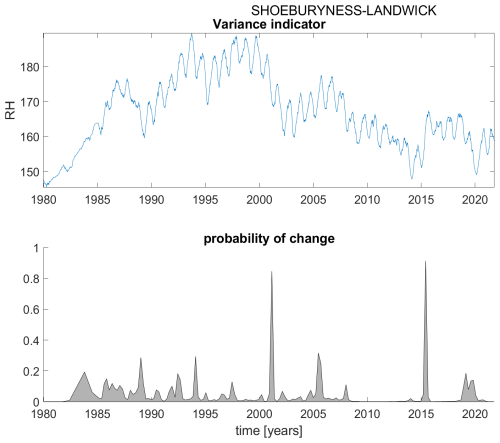

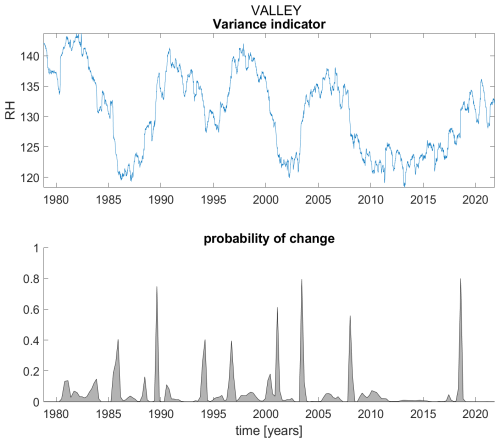

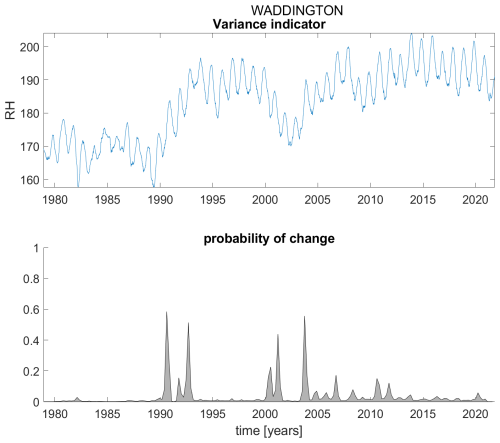

To complement the analysis based on autocorrelations, we also demonstrate early warning signals based on variance (see Figs. B1–B22 in the Appendix). Smith et al. (2023) previously discussed changes in measurement noise and demonstrated that they may lead to anti-correlation between AR1 and variance indicators. This confirms the earlier observations by Livina et al. (2012) that increasing correlations in the data may lead to an increase of autocorrelations and a simultaneous decrease of variance. In particular, this is due to the finite-size effect of the observational sliding window used for calculation of each indicator. In our current analysis, the variance indicator provided noisier results with less clear changes than autocorrelations, and therefore the autocorrelation indicator seems more suitable for the purpose of instrumental change detection.
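One simple way in which a change in measurement noise can move the two indicators in opposite directions can be illustrated numerically. The sketch below is not the setup of the studies cited above: it assumes a synthetic AR(1) signal with additive white measurement noise, and the function names `ar1_series` and `lag1_autocorrelation` are introduced here for illustration only. Under these assumptions, a larger noise level raises the variance of the observed series while lowering its lag-1 autocorrelation.

```python
import numpy as np


def ar1_series(n, phi, rng):
    """Simulate a zero-mean AR(1) process x[t] = phi * x[t-1] + eps[t]."""
    x = np.zeros(n)
    eps = rng.standard_normal(n)
    for t in range(1, n):
        x[t] = phi * x[t - 1] + eps[t]
    return x


def lag1_autocorrelation(x):
    """Sample lag-1 autocorrelation of a 1-D array."""
    centred = x - x.mean()
    return float(np.dot(centred[:-1], centred[1:]) / np.dot(centred, centred))


rng = np.random.default_rng(0)
signal = ar1_series(20000, phi=0.8, rng=rng)  # synthetic "true" signal

results = []
for noise_sd in (0.0, 1.0, 2.0):
    # Assumption: measurement noise is white and independent of the signal.
    observed = signal + noise_sd * rng.standard_normal(signal.size)
    results.append((noise_sd, lag1_autocorrelation(observed), float(observed.var())))

for noise_sd, acf1, var in results:
    print(f"noise sd = {noise_sd:.1f}: ACF(1) = {acf1:.2f}, variance = {var:.2f}")
```

For this idealised case the behaviour follows from the fact that white noise adds to the variance of the observed series but contributes nothing to its lag-1 covariance.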

We have applied tipping point analysis in a novel way (compared with its conventional use) to study instrumental and sensor phenomena in a large UK dataset of relative humidity. We carefully preprocessed the data to ensure that no underlying trends would affect the results, and as a consequence we have been able to reveal signatures of sensor events and phenomena.

At many of the stations, various transitions were observed when a small sliding window was applied. In particular, in the mid-1980s and mid-1990s (see figures in the main text and in the Appendix), the tipping point analysis identified several rapid transitions, which, based on verification against the station records, are likely to have been caused by instrumental artefacts. In other cases, transitions are gradual and are often reversed later. Such reversals may be related to local stabilisation of environmental conditions.

We did not attempt to distinguish different types of sensor issues. Rather, we wanted to demonstrate that general detection is possible, which can then guide further investigation of such issues.

In this paper, we analysed dew point and relative humidity. In some cases, relative humidity is measured directly, but in the dataset we use it has been calculated using conversion to dew point temperature, with several stages of processing. We identified short-term instrumental effects in the data using early-warning indicators of the tipping point analysis. These techniques are useful for identifying instrumental changes in cases where documentation and historical metadata may be missing. This application of the early-warning signal techniques is new and supplements the conventional use of tipping point analysis in climatology and geophysics.

We have demonstrated that autocorrelations are both sensitive and robust in detection of known sensor changes. While not all metadata of measurement circumstances may be available, autocorrelation indicators provide a tool for scanning datasets for such changes, whose rapid development may help distinguish them from signals generated by slower processes, such as climate change. These dynamics should be taken into account in the development of predictive techniques based on EWS signatures.

Our approach has the advantage of detecting changes that are not necessarily related to standard deviation, as many such changes are related to autocorrelations only. The method is computationally light and does not require extensive model training with tuning of multiple parameters.

The observed effects are not climatic tipping points, but rather an example of short-term critical transitions caused by instrumental modifications and/or sensor artefacts. Such changes are important to identify, and our approach allows their detection to be automated and, if necessary, performed in real time. This means that EWS techniques could be used for condition monitoring of environmental sensor networks. This is a promising novel application of the tipping point analysis in a new domain.

We summarise the analysis in Table A1 and provide further illustrations from several other stations.
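The caption of Table A1 refers to detections with probability above 0.8. As a minimal sketch of how such a threshold could be applied to a probability series, the hypothetical helper `detections_above_threshold` below reports one time stamp, at the peak probability, for each contiguous run of values above the threshold. The Bayesian change-point step itself is not implemented here, and the input values are synthetic and purely illustrative; they are not results from any station.

```python
import numpy as np


def detections_above_threshold(times, probabilities, threshold=0.8):
    """Return one time stamp (at peak probability) per contiguous run above the threshold."""
    times = np.asarray(times)
    probabilities = np.asarray(probabilities, dtype=float)
    above = probabilities > threshold
    detections = []
    start = None
    for i, flag in enumerate(above):
        if flag and start is None:
            start = i  # a new run above the threshold begins here
        if start is not None and (not flag or i == above.size - 1):
            end = i + 1 if flag else i  # run covers indices start .. end - 1
            peak = start + int(np.argmax(probabilities[start:end]))
            detections.append(times[peak].item())
            start = None
    return detections


# Synthetic, illustrative inputs only (not data from the study).
example_years = np.arange(1990, 2000)
example_probabilities = [0.1, 0.2, 0.85, 0.95, 0.3, 0.1, 0.1, 0.9, 0.2, 0.1]
example_detections = detections_above_threshold(example_years, example_probabilities)
print(example_detections)
```

Merging each run into a single detection avoids counting one change several times when the probability stays high over neighbouring time steps; other conventions, such as reporting the first crossing, would be equally defensible.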

Table A1. Detection of changes in the UK relative humidity records based on detection probability above 0.8 using the Bayesian technique.

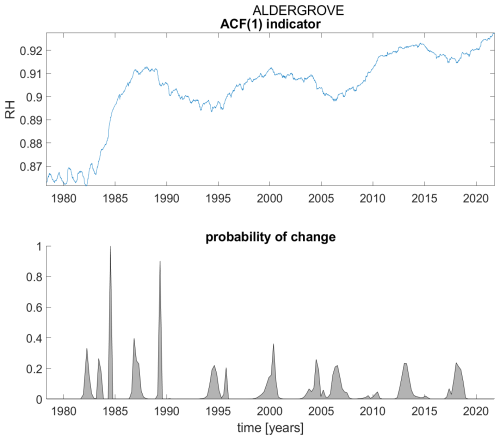

Figure A1. ACF(1) indicator (upper panel) and probability of detection of changes in the ACF(1) indicator (lower panel) for station Aldergrove.

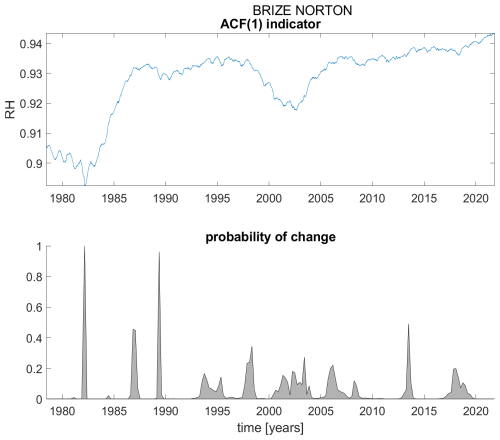

Figure A2. ACF(1) indicator (upper panel) and probability of detection of changes in the ACF(1) indicator (lower panel) for station Brize Norton.

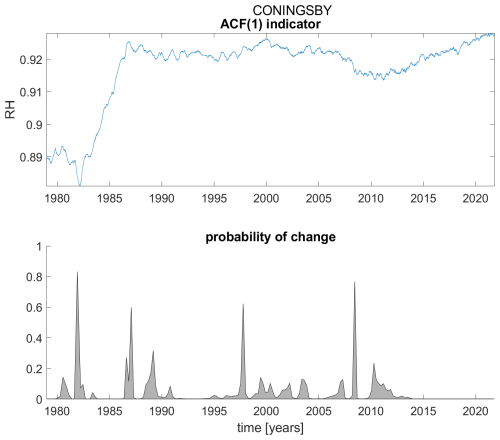

Figure A3. ACF(1) indicator (upper panel) and probability of detection of changes in the ACF(1) indicator (lower panel) for station Coningsby.

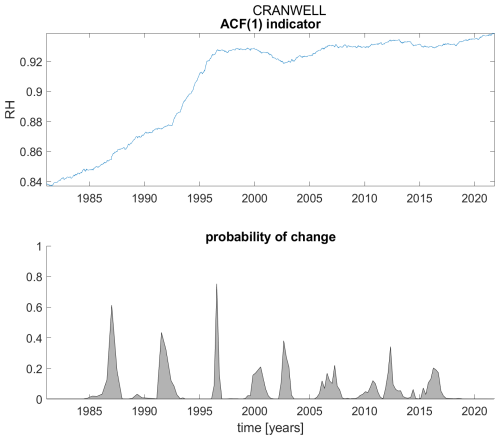

Figure A4. ACF(1) indicator (upper panel) and probability of detection of changes in the ACF(1) indicator (lower panel) for station Cranwell.

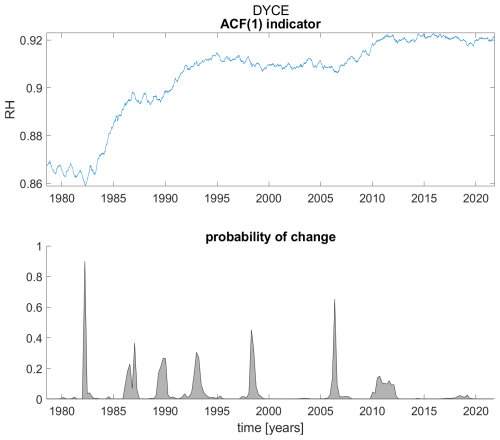

Figure A5. ACF(1) indicator (upper panel) and probability of detection of changes in the ACF(1) indicator (lower panel) for station Dyce.

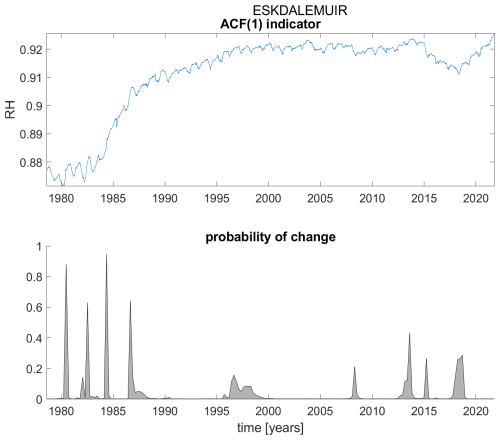

Figure A6. ACF(1) indicator (upper panel) and probability of detection of changes in the ACF(1) indicator (lower panel) for station Eskdalemuir.

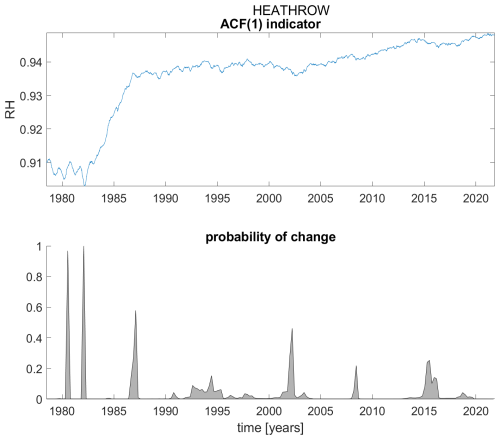

Figure A7. ACF(1) indicator (upper panel) and probability of detection of changes in the ACF(1) indicator (lower panel) for station Heathrow.

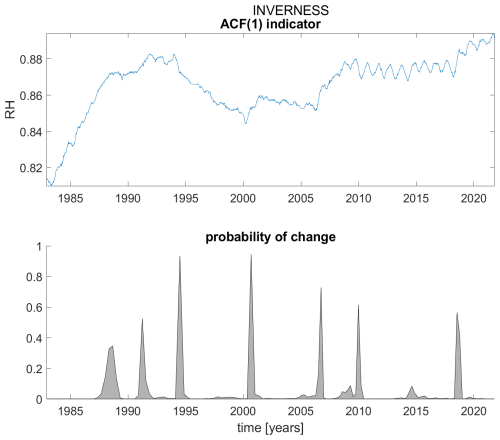

Figure A8. ACF(1) indicator (upper panel) and probability of detection of changes in the ACF(1) indicator (lower panel) for station Inverness.

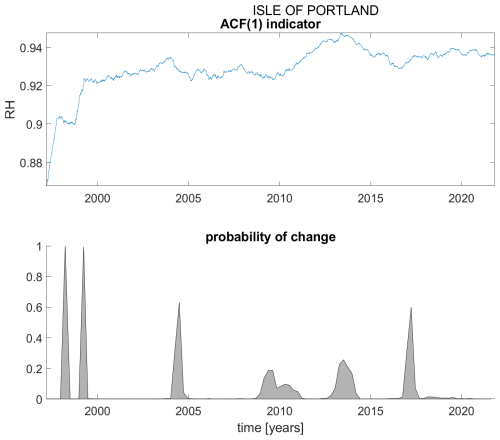

Figure A9. ACF(1) indicator (upper panel) and probability of detection of changes in the ACF(1) indicator (lower panel) for station Isle of Portland.

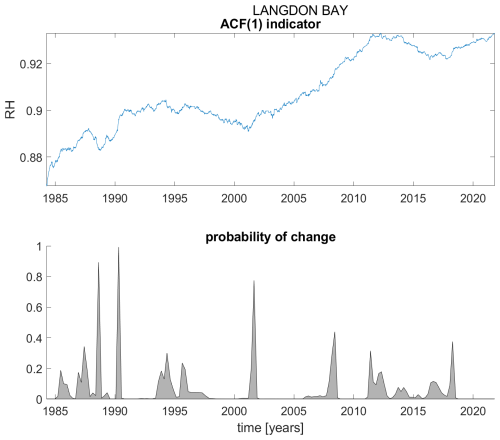

Figure A10. ACF(1) indicator (upper panel) and probability of detection of changes in the ACF(1) indicator (lower panel) for station Langdon Bay.

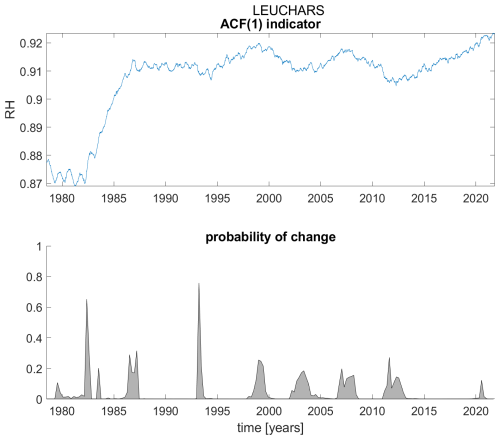

Figure A11. ACF(1) indicator (upper panel) and probability of detection of changes in the ACF(1) indicator (lower panel) for station Leuchars.

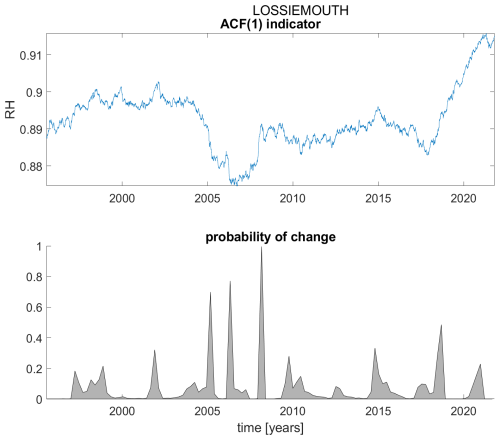

Figure A12. ACF(1) indicator (upper panel) and probability of detection of changes in the ACF(1) indicator (lower panel) for station Lossiemouth.

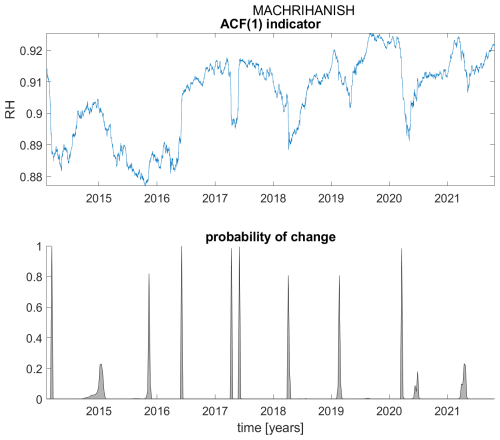

Figure A13. ACF(1) indicator (upper panel) and probability of detection of changes in the ACF(1) indicator (lower panel) for station Machrihanish.

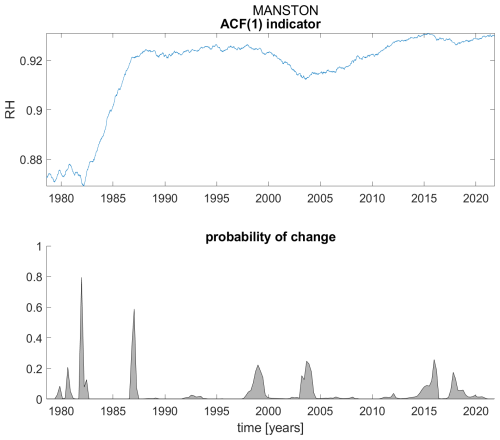

Figure A14. ACF(1) indicator (upper panel) and probability of detection of changes in the ACF(1) indicator (lower panel) for station Manston.

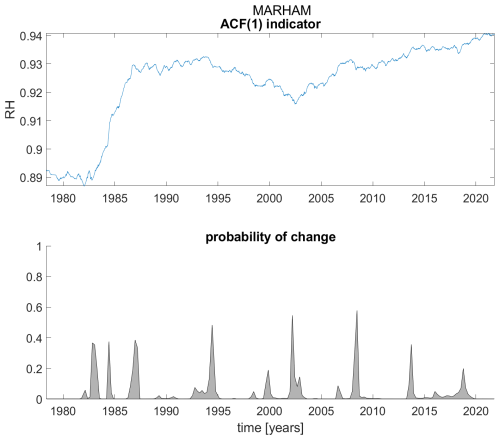

Figure A15. ACF(1) indicator (upper panel) and probability of detection of changes in the ACF(1) indicator (lower panel) for station Marham.

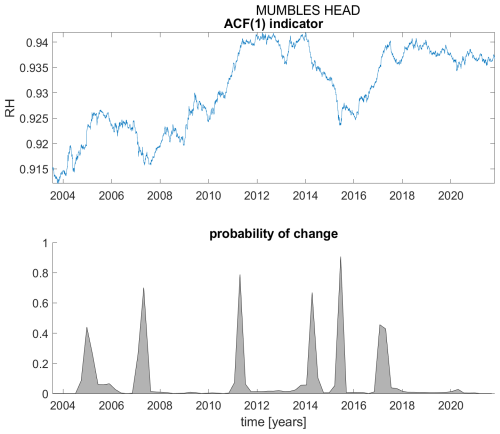

Figure A16. ACF(1) indicator (upper panel) and probability of detection of changes in the ACF(1) indicator (lower panel) for station Mumbles Head.

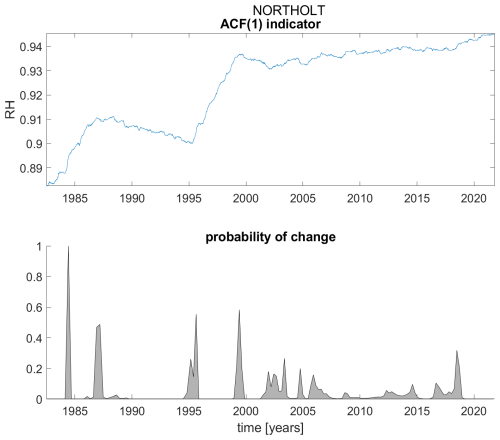

Figure A17. ACF(1) indicator (upper panel) and probability of detection of changes in the ACF(1) indicator (lower panel) for station Northolt.

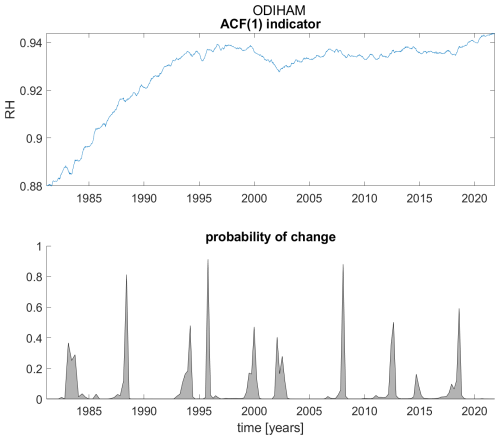

Figure A18. ACF(1) indicator (upper panel) and probability of detection of changes in the ACF(1) indicator (lower panel) for station Odiham.

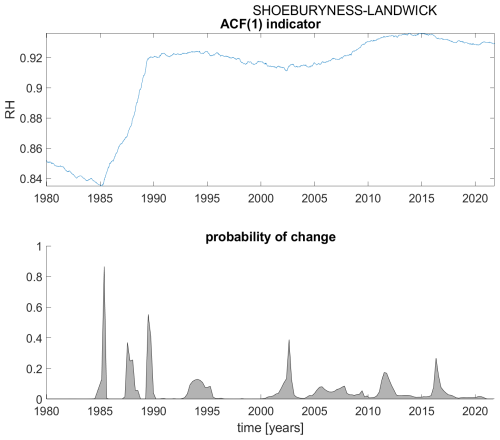

Figure A19. ACF(1) indicator (upper panel) and probability of detection of changes in the ACF(1) indicator (lower panel) for station Shoeburyness-Landwick.

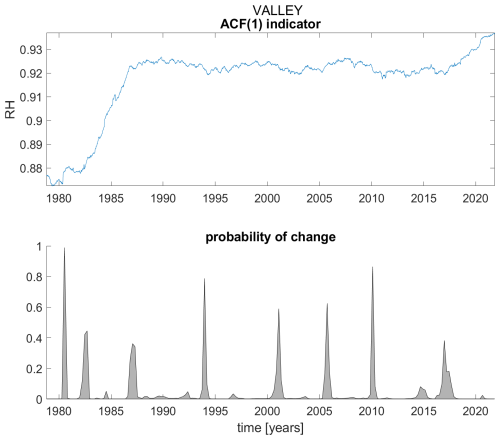

Figure A20. ACF(1) indicator (upper panel) and probability of detection of changes in the ACF(1) indicator (lower panel) for station Valley.

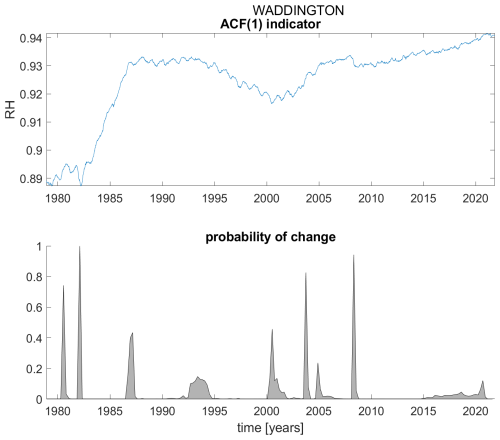

Figure A21. ACF(1) indicator (upper panel) and probability of detection of changes in the ACF(1) indicator (lower panel) for station Waddington.

Figure B1. Variance indicator (upper panel) and probability of detection of changes in the variance indicator (lower panel) for station Aldergrove.

Figure B2. Variance indicator (upper panel) and probability of detection of changes in the variance indicator (lower panel) for station Brize Norton.

Figure B3. Variance indicator (upper panel) and probability of detection of changes in the variance indicator (lower panel) for station Coningsby.

Figure B4. Variance indicator (upper panel) and probability of detection of changes in the variance indicator (lower panel) for station Cranwell.

Figure B5. Variance indicator (upper panel) and probability of detection of changes in the variance indicator (lower panel) for station Dyce.

Figure B6. Variance indicator (upper panel) and probability of detection of changes in the variance indicator (lower panel) for station Eskdalemuir.

Figure B7. Variance indicator (upper panel) and probability of detection of changes in the variance indicator (lower panel) for station Heathrow.

Figure B8. Variance indicator (upper panel) and probability of detection of changes in the variance indicator (lower panel) for station Inverness.

Figure B9. Variance indicator (upper panel) and probability of detection of changes in the variance indicator (lower panel) for station Isle of Portland.

Figure B10. Variance indicator (upper panel) and probability of detection of changes in the variance indicator (lower panel) for station Langdon Bay.

Figure B11. Variance indicator (upper panel) and probability of detection of changes in the variance indicator (lower panel) for station Leuchars.

Figure B12. Variance indicator (upper panel) and probability of detection of changes in the variance indicator (lower panel) for station Lossiemouth.

Figure B13. Variance indicator (upper panel) and probability of detection of changes in the variance indicator (lower panel) for station Machrihanish.

Figure B14. Variance indicator (upper panel) and probability of detection of changes in the variance indicator (lower panel) for station Manston.

Figure B15. Variance indicator (upper panel) and probability of detection of changes in the variance indicator (lower panel) for station Marham.

Figure B16. Variance indicator (upper panel) and probability of detection of changes in the variance indicator (lower panel) for station Mumbles Head.

Figure B17. Variance indicator (upper panel) and probability of detection of changes in the variance indicator (lower panel) for station Northolt.

Figure B18. Variance indicator (upper panel) and probability of detection of changes in the variance indicator (lower panel) for station Odiham.

Figure B19. Variance indicator (upper panel) and probability of detection of changes in the variance indicator (lower panel) for station Shoeburyness-Landwick.

Figure B20. Variance indicator (upper panel) and probability of detection of changes in the variance indicator (lower panel) for station Valley.

Figure B21. Variance indicator (upper panel) and probability of detection of changes in the variance indicator (lower panel) for station Waddington.

The code is based on the autocorrelation function, which is available in any programming language. The data are the property of the UK Met Office.
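As an illustration of how little code such an indicator requires, the following is a minimal sketch of sliding-window lag-1 autocorrelation and variance indicators. It is not the authors' code: the function name `sliding_indicators`, the window length and the synthetic test series are assumptions made here for demonstration, and no detrending or Bayesian change detection is included.

```python
import numpy as np


def sliding_indicators(x, window):
    """Return lag-1 autocorrelation and variance computed in each sliding window."""
    x = np.asarray(x, dtype=float)
    n_windows = x.size - window + 1
    if window < 3 or n_windows < 1:
        raise ValueError("window must be >= 3 and no longer than the series")
    acf1 = np.empty(n_windows)
    variance = np.empty(n_windows)
    for i in range(n_windows):
        segment = x[i:i + window]
        centred = segment - segment.mean()
        denom = np.dot(centred, centred)
        # Lag-1 autocorrelation of the window; undefined for a constant segment.
        acf1[i] = np.dot(centred[:-1], centred[1:]) / denom if denom > 0 else np.nan
        variance[i] = segment.var()
    return acf1, variance


# Synthetic example: white noise followed by correlated (AR(1)) noise,
# standing in for a change of fluctuation pattern at the midpoint.
rng = np.random.default_rng(1)
white = rng.standard_normal(500)
red = np.zeros(500)
for t in range(1, 500):
    red[t] = 0.7 * red[t - 1] + rng.standard_normal()
series = np.concatenate([white, red])

acf1, variance = sliding_indicators(series, window=100)
print(acf1[:300].mean(), acf1[-300:].mean())
```

In this synthetic case the autocorrelation indicator is close to zero in windows lying in the first half of the series and clearly higher in windows lying in the second half, which is the kind of shift a change-point method would then be asked to locate.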

SB proposed the idea for the study. KW sourced and analysed the meteorological data. VL carried out the tipping point analysis, drafted the manuscript and addressed the reviewer comments. KW and SB reviewed and edited the manuscript.

The contact author has declared that none of the authors has any competing interests.

Publisher's note: Copernicus Publications remains neutral with regard to jurisdictional claims made in the text, published maps, institutional affiliations, or any other geographical representation in this paper. While Copernicus Publications makes every effort to include appropriate place names, the final responsibility lies with the authors. Views expressed in the text are those of the authors and do not necessarily reflect the views of the publisher.

This work was funded by the UK Government's Department for Science, Innovation & Technology (DSIT) through the UK's National Measurement System programmes. Kate Willett was supported by the Met Office Hadley Centre Climate Programme funded by DSIT. We would like to thank the reviewers for their comments that helped improve the manuscript.

This work was funded by the UK Government's Department for Science, Innovation and Technology through the UK's National Measurement System programmes.

This paper was edited by Daniel Kastinen and reviewed by Chris Boulton and one anonymous referee.

Brockwell, P. and Davis, R.: Introduction to Time Series and Forecasting, Springer Texts in Statistics, https://doi.org/10.1007/978-3-319-29854-2, 2016.

Broomhead, D. and King, G.: On the Qualitative Analysis of Experimental Dynamical Systems. In book: Nonlinear Phenomena and Chaos, Malvern Physics Series, edited by: Pike, E. and Sarkar, S., Adam Hilger Ltd, Bristol, ISBN 10 0852744943, 1986.

Brugnara, Y., McCarthy, M., Willett, K., and Rayner, N.: Homogenization of daily temperature and humidity series in the UK, International Journal of Climatology, 43, 1693–1709, https://doi.org/10.1002/joc.7941, 2023.

Ciaburro, G.: Machine fault detection methods based on machine learning algorithms: A review, Mathematical Biosciences and Engineering, 19, 11453–11490, https://doi.org/10.3934/mbe.2022534, 2022.

Dahoui, M.: Use of machine learning for the detection and classification of observation anomalies, ECMWF Newsletter No. 174, 23–27, Winter 2022/23, https://doi.org/10.21957/n64md0xa5d, 2023.

Dahoui, M., Bormann, N., and Isaksen, L.: Automatic checking of observations at ECMWF, ECMWF Newsletter No. 140, 21–24, https://doi.org/10.21957/kuwqjp5y, 2014.

Dahoui, M., Isaksen, L., and Radnoti, G.: Assessing the impact of observations using observation-minus-forecast residuals, ECMWF Newsletter No. 152, 27–31, https://doi.org/10.21957/51j3sa, 2017.

Dahoui, M., Bormann, N., Isaksen, L., and McNally, T.: Recent developments in the automatic checking of Earth system observations, ECMWF Newsletter No. 162, 27–31, Winter 2019/20, https://doi.org/10.21957/9tys2md61a, 2020.

Dakos, V., Carpenter, S., Brock, W., Ellison, A., Guttal, V., Ives, A., Kefi, S., Livina, V., Seekell, D., van Nes, E., and Scheffer, M.: Methods for Detecting Early Warnings of Critical Transitions in Time Series Illustrated Using Simulated Ecological Data, PLoS ONE, 7, e41010, https://doi.org/10.1371/journal.pone.0041010, 2012.

Domonkos, P., Guijarro, J. A., Venema, V., Brunet, M., and Sigro, J.: Efficiency of Time Series Homogenization: Method Comparison with 12 Monthly Temperature Test Datasets, J. Climate, 34, 2877–2891, https://doi.org/10.1175/JCLI-D-20-0611.1, 2021.

Dunn, R.: HadISD version 3: monthly updates, Hadley Centre Technical Note, N103, https://library.metoffice.gov.uk/Portal/DownloadImageFile.ashx?objectId=1110&ownerType=0&ownerId=635758 (last access: 17 November 2025), 2019.

Dunn, R. J. H., Willett, K. M., Thorne, P. W., Woolley, E. V., Durre, I., Dai, A., Parker, D. E., and Vose, R. S.: HadISD: a quality-controlled global synoptic report database for selected variables at long-term stations from 1973–2011, Clim. Past, 8, 1649–1679, https://doi.org/10.5194/cp-8-1649-2012, 2012.

Dunn, R. J. H., Willett, K. M., Parker, D. E., and Mitchell, L.: Expanding HadISD: quality-controlled, sub-daily station data from 1931, Geosci. Instrum. Method. Data Syst., 5, 473–491, https://doi.org/10.5194/gi-5-473-2016, 2016.

Gladwell, M.: The Tipping Point: How Little Things Can Make a Big Difference, Little Brown, ISBN 10 0316346624, 2000.

Held, H. and Kleinen, T.: Detection of climate system bifurcations by degenerate fingerprinting, Geophys. Res. Lett., 31, L23207, https://doi.org/10.1029/2004GL020972, 2004.

Hersbach, H., Bell, B., Berrisford, P., Hirahara, S., Horanyi, A., Muñoz-Sabater, J., Nicolas, J., Peubey, C., Radu, R., Schepers, D., Simmons, A., Soci, C., Abdalla, S., Abellan, X., Balsamo, G., Bechtold, P., Biavati, G., Bidlot, J., Bonavita, M., De Chiara, G., Dahlgren, P., Dee, D., Diamantakis, M., Dragani, R., Flemming, J., Forbes, R., Fuentes, M., Geer, A., Haimberger, L., Healy, S., Hogan, R., Holm, E., Janiskova, M., Keeley, S., Laloyaux, P., Lopez, P., Lupu, C., Radnoti, G., de Rosnay, P., Rozum, I., Vamborg, F., Villaume, S., and Thepaut, J.: The ERA5 global reanalysis, Q. J. R. Meteorol. Soc., 146, 1999–2049, https://doi.org/10.1002/qj.3803, 2020.

Killick, R., Jolliffe, I., and Willett, K.: Benchmarking the performance of homogenization algorithms on synthetic daily temperature data, International Journal of Climatology, 42, 3968–3986, https://doi.org/10.1002/joc.7462, 2022.

Lenton, T., Held, H., Kriegler, E., Hall, J., Lucht, W., Rahmstorf, S., and Schellnhuber, H.: Tipping elements in the Earth's climate system, Proceedings of the National Academy of Sciences USA, 105, 1786–1793, https://doi.org/10.1073/pnas.0705414105, 2008.

Livina, V.: Network analysis: Connected climate tipping elements, Nature Climate Change, 13, 15–16, https://doi.org/10.1038/s41558-022-01573-5, 2023.

Livina, V. and Lenton, T.: A modified method for detecting incipient bifurcations in a dynamical system, Geophys. Res. Lett., 34, L03712, https://doi.org/10.1029/2006GL028672, 2007.

Livina, V. N., Kwasniok, F., and Lenton, T. M.: Potential analysis reveals changing number of climate states during the last 60 kyr, Clim. Past, 6, 77–82, https://doi.org/10.5194/cp-6-77-2010, 2010.

Livina, V., Kwasniok, F., Lohmann, G., Kantelhardt, J., and Lenton, T.: Changing climate states and stability: from Pliocene to present, Climate Dynamics, 37, 2437–2453, https://doi.org/10.1007/s00382-010-0980-2, 2011.

Livina, V., Ditlevsen, P., and Lenton, T.: An independent test of methods of detecting system states and bifurcations in time-series data, Physica A, 391, 485–496, https://doi.org/10.1016/j.physa.2011.08.025, 2012.

Livina, V., Lohmann, G., Mudelsee, M., and Lenton, T.: Forecasting the underlying potential governing the time series of a dynamical system, Physica A, 392, 3891–3902, https://doi.org/10.1016/j.physa.2013.04.036, 2013.

Livina, V., Barton, E., and Forbes, A.: Tipping point analysis of the NPL footbridge, Journal of Civil Structural Health Monitoring, 4, 91–98, https://doi.org/10.1007/s13349-013-0066-z, 2014.

Livina, V., Lewis, A., and Wickham, M.: Tipping point analysis of electrical resistance data with early warning signals of failure for predictive maintenance, Journal of Electronic Testing, 36, 569–576, https://doi.org/10.1007/s10836-020-05899-w, 2020.

Menne, M. and Williams Jr., C.: Homogenization of temperature series via pairwise comparisons, J. Climate, 22, 1700–1717, https://doi.org/10.1175/2008JCLI2263.1, 2009.

Peng, C., Buldyrev, S., Havlin, S., Simons, M., Stanley, H., and Goldberger, A.: Mosaic organization of DNA nucleotides, Phys. Rev. E, 49, 1685–1689, https://doi.org/10.1103/PhysRevE.49.1685, 1994.

Peterson, T., Easterling, D., Karl, T., Groisman, P., Nicholls, N., Plummer, N., Torok, S., Auer, I., Boehm, R., Gullett, D., Vincent, L., Heino, R., Tuomenvirta, H., Mestre, O., Szentimrey, T., Salinger, Forland, E., Hanssen-Bauer, I., Alexandersson, H., Jones, P., and Parker, D.: Homogeneity adjustments of in situ atmospheric climate data: a review, Int. J. Climatol., 18, 1493–1517, https://doi.org/10.1002/(SICI)1097-0088(19981115)18:13<1493::AID-JOC329>3.0.CO;2-T, 1998.

Poincaré, H.: Les Methodes Nouvelles de la Mecanique Celeste, v. 1, Gauthier-Villars, Paris, https://doi.org/10.3931/e-rara-421, 1892.

Reeves, S., Chen, J., Wang, J., Lund, X., and Lu, Q.: A review and comparison of changepoint detection techniques for climate data, J. Appl. Meteorol. Clim., 46, 900–915, https://doi.org/10.1175/JAM2493.1, 2007.

Smith, A., Lott, N., and Vose, R.: The Integrated Surface Database: Recent Developments and Partnerships, Bulletin of the American Meteorological Society, 92, 704–708, https://doi.org/10.1175/2011BAMS3015.1, 2011.

Smith, T., Zotta, R.-M., Boulton, C. A., Lenton, T. M., Dorigo, W., and Boers, N.: Reliability of resilience estimation based on multi-instrument time series, Earth Syst. Dynam., 14, 173–183, https://doi.org/10.5194/esd-14-173-2023, 2023.

Todling, R.: An approach to assess observation impact based on observation-minus-forecast residuals, ECMWF Workshop on Diagnostics of data assimilation system performance, 15–17 June 2009, https://www.ecmwf.int/sites/default/files/elibrary/2009/76705-approach-assess-observation-impact-based-observation-minus-forecast-residuals-poster_0.pdf (last access: 17 November 2025), 2009.

Todling, R., Semane, N., Anthes, R., and Healy, S.: The Relationship Between Two Methods for Estimating Uncertainties in Data Assimilation, Quarterly Journal of the Royal Meteorological Society, 148, 2942–2954, https://doi.org/10.1002/qj.4343, 2022.

Trewin, B.: A daily homogenized temperature data set for Australia, Int. J. Climatol., 33, 1510–1529, https://doi.org/10.1002/joc.3530, 2013.

Vaz Martins, T., Livina, V. N., Majtey, A., and Toral, R.: Resonance induced by repulsive interactions in a model of globally coupled bistable systems, Phys. Rev. E, 81, 041103, https://doi.org/10.1103/PhysRevE.81.041103, 2010.

Venema, V. K. C., Mestre, O., Aguilar, E., Auer, I., Guijarro, J. A., Domonkos, P., Vertacnik, G., Szentimrey, T., Stepanek, P., Zahradnicek, P., Viarre, J., Müller-Westermeier, G., Lakatos, M., Williams, C. N., Menne, M. J., Lindau, R., Rasol, D., Rustemeier, E., Kolokythas, K., Marinova, T., Andresen, L., Acquaotta, F., Fratianni, S., Cheval, S., Klancar, M., Brunetti, M., Gruber, C., Prohom Duran, M., Likso, T., Esteban, P., and Brandsma, T.: Benchmarking homogenization algorithms for monthly data, Clim. Past, 8, 89–115, https://doi.org/10.5194/cp-8-89-2012, 2012.

Waller, J.: Estimating the full observation error covariance matrix, Tackling Technical Challenges in Land Data Assimilation, AIMES Group (Analysis, Integration, and Modelling of the Earth System), 14–16 June 2021, https://aimesproject.org/wp-content/uploads/2021/08/Waller_AIMES_Workshop.pdf (last access: 17 November 2025), 2021.

Waller, J., Dancea, S., and Nichols, N.: Theoretical insight into diagnosing observation error correlations using observation-minus-background and observation-minus-analysis statistics, Q. J. R. Meteorol. Soc., 142, 418–431, https://doi.org/10.1002/qj.2661, 2015.

Willett, K. M., Dunn, R. J. H., Thorne, P. W., Bell, S., de Podesta, M., Parker, D. E., Jones, P. D., and Williams Jr., C. N.: HadISDH land surface multi-variable humidity and temperature record for climate monitoring, Clim. Past, 10, 1983–2006, https://doi.org/10.5194/cp-10-1983-2014, 2014.

Wissel, C.: A universal law of the characteristic return time near thresholds, Oecologia, 65, 101–107, https://doi.org/10.1007/BF00384470, 1984.

Yang, W., Reis, M., Borodin, V., Juge, M., and Roussy, A.: An interpretable unsupervised Bayesian network model for fault detection and diagnosis, Control Engineering Practice, 127, 105304, https://doi.org/10.1016/j.conengprac.2022.105304, 2022.

Zhao, K., Wulder, M., Hu, T., Bright, R., Wu, Q., Qin, H., Li, Y., Toman, E., Mallick, B., Zhang, X., and Brown, M.: Detecting change-point, trend, and seasonality in satellite time series data to track abrupt changes and nonlinear dynamics: A Bayesian ensemble algorithm, Remote Sensing of Environment, 232, 111181, https://doi.org/10.1016/j.rse.2019.04.034, 2019.