the Creative Commons Attribution 4.0 License.

the Creative Commons Attribution 4.0 License.

GeoAI: a review of artificial intelligence approaches for the interpretation of complex geomatics data

Roberto Pierdicca

Marina Paolanti

Researchers have explored the benefits and applications of modern artificial intelligence (AI) algorithms in different scenarios. For the processing of geomatics data, AI offers overwhelming opportunities. Fundamental questions include how AI can be specifically applied to or must be specifically created for geomatics data. This change is also having a significant impact on geospatial data. The integration of AI approaches in geomatics has developed into the concept of geospatial artificial intelligence (GeoAI), which is a new paradigm for geographic knowledge discovery and beyond. However, little systematic work currently exists on how researchers have applied AI for geospatial domains. Hence, this contribution outlines AI-based techniques for analysing and interpreting complex geomatics data. Our analysis has covered several gaps, for instance defining relationships between AI-based approaches and geomatics data. First, technologies and tools used for data acquisition are outlined, with a particular focus on red–green–blue (RGB) images, thermal images, 3D point clouds, trajectories, and hyperspectral–multispectral images. Then, how AI approaches have been exploited for the interpretation of geomatic data is explained. Finally, a broad set of examples of applications is given, together with the specific method applied. Limitations point towards unexplored areas for future investigations, serving as useful guidelines for future research directions.

Geomatics is a discipline that deals with the automated processing and management of complex 2D or 3D information. It is defined as a multidisciplinary, systemic, and integrated approach that allows collecting, storing, integrating, modelling, and analysing spatially georeferenced data from several sources, with well-defined accuracy characteristics and continuity, in a digital format (Gomarasca, 2010).

Nowadays, the processing of large amounts of data and information in an interdisciplinary and interoperable way relies on a growing variety of tools and data collection methods. The binomial science and technology directly connected to the geomatics disciplines allow the continuous development of techniques for acquiring and representing data. Surveying and representation are closely linked to each other, as shown by the close connection between the disciplines traditionally associated with surveying, such as geodesy, topography, photogrammetry, and remote sensing, and those related to representation, such as cartography (Konecny, 2002).

Geomatics data are acquired by various systems and platforms, generating geospatial and spatiotemporal heterogeneous information; indeed, the acquisition techniques provide different geomatics data, which can be images (RGB, multispectral and hyperspectral as well as thermal), trajectories, and point clouds. To date, existing algorithms for data processing mainly work with manual or semiautomatic approaches, since full automation has not yet achieved greater reliability and accuracy. The resulting metric and georeferenced information are then used, catalogued, administered, displayed, and stored in a geographic information system (GIS) or generic databases. However, after moving into the era of big data, the analysis and practical use of the information contained within this huge amount of data require tailored computational approaches such as machine learning (ML) and deep learning (DL) (LeCun et al., 2015). The attractive feature of AI is its ability to identify relevant patterns within complex, nonlinear data without the need for any a priori mechanistic understanding of the geomatics processes. Today, DL and AI algorithms have been successfully developed and applied in many geomatics applications (Martín-Jiménez et al., 2018; Zhang et al., 2020). According to the type of data collected, different AI methods are proposed for classification, semantic segmentation, or object detection (Hong et al., 2020b).

1.1 Theoretical background, motivation, and research questions

Existing reviews explore particular geomatics data approaches, generally based on ML and DL, to solve a specific issue. Examples of well-structured systematic reviews focused on RGB-D images (Guo et al., 2016; Y. Li et al., 2018; Zhao et al., 2019; Zhu et al., 2017), thermal images (Ali et al., 2020; Dunderdale et al., 2020; Kirimtat and Krejcar, 2018; Vicnesh et al., 2020), point clouds (Guo et al., 2020; Y. Li et al., 2020; Xie et al., 2020; J. Zhang et al., 2019), trajectories (Bian et al., 2018; Yang et al., 2018a; Bian et al., 2019), and hyperspectral and multispectral images (Audebert et al., 2019; Ghamisi et al., 2017; S. Li et al., 2019; Signoroni et al., 2019; Yuan et al., 2021; Zang et al., 2021; Kattenborn et al., 2021) as well as their applications are available in the scientific literature. However, while the scientific literature recognises the importance of geomatics data processing since it covers many fields of application, there is a lack of systematic investigation dealing with AI-based data processing techniques. For geospatial domains, fundamental questions include how AI can be specifically applied to or must be specifically created for geospatial data. (Janowicz et al., 2020) proposed an overview of spatially explicit AI. ML has been a core component of spatial analysis in geomatics for classification, clustering, and prediction. In addition, DL is being integrated with geospatial data to automatically extract useful information from satellite, aerial, or drone imagery (just to mention some) by means of image classification, object detection, semantic, and instance segmentation. The integration of AI, ML, and DL with geomatics is broadly recognised and defined as “geomatics artificial intelligence” (GeoAI).

Considering the latest achievements in data collection and processing (Grilli et al., 2017), geomatics is facing the worldwide challenge of, on one hand, reducing the need for manual intervention for huge datasets and, on the other, improving methods for facilitating their interpretation. GeoAI could represent the turning point for the entire research community, but, to the best of our knowledge, there is currently no survey on this emerging topic.

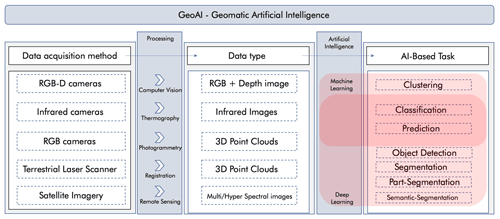

To close this gap, this review aims to provide a technical overview of the advances and opportunities offered by AI for automatically processing and analysing geomatics data. This work emphasises that, despite their specific technical requirements, the computational methods used for these tasks can be integrated within a single workflow to optimise several steps of interpreting complex geomatics data, regardless of the application. Considering the multidisciplinary nature of geomatics data, major efforts have been undertaken in regard to RGB-D images, infrared thermographic (IRT) images, point clouds, trajectory data (TRAJ), and multispectral imaging (MSI) and hyperspectral imaging (HSI). Initially, a literature review was conducted to understand the main data acquisition technologies and if and how AI methods and techniques could help in this field. In the following account, specific attention is given to the state of the art in AI with the selected data type mentioned above. In particular, the techniques and methods for each type of research are analysed, the main paths that most approaches follow are also summarised, and their contributions are indicated. Thereafter, the reviewed approaches are categorised and compared from multiple perspectives, including methodologies, functions, and an analysis of the pros and cons of each category. Each technology and method reported in Fig. 1 will be analysed.

Figure 1Artificial intelligence approaches for the interpretation of complex geomatics data. Conceptualisation of the review process.

In particular, the purposes, issues, and motivations of this study were investigated to set the following research questions (RQs).

- RQ1

To explore the most commonly used methodologies in recent years for dealing with geomatics data, the following question has been set: among the well-established AI methods, which is the most commonly used in geomatics?

- RQ2

To understand if the methodologies used depend on the processed data, the following question arises: do geomatics data influence the choice of using one methodology rather than another?

- RQ3

To provide an overview of the main tasks performed using geomatics data, the following question must be answered: for which tasks are geomatics data used?

- RQ4

To better understand which type of geomatic data is used in different application domains, the following question arises: are there relationships between application domains and geomatics data?

1.2 Paper organisation

To enhance its readability and facilitate reader comprehension, the paper has been structured as follows. Section 1.3 describes the methodology adopted in the choice of the articles identified and selected for the review work. Section 2 presents the related work on the application of AI methods to the geomatics data in the test. Section 3 summarises the concepts, existing techniques, and important applications of GeoAI. Section 4 describes the limitations and implications of this research, highlighting some emerging applications of AI for geomatics data analysis. Finally, Sect. 5 presents the implications of the research and concluding remarks.

1.3 Research strategy definition

A systematic review of the literature was conducted using PRISMA guidelines and electronic databases: ieeeXplore1, Scopus2, Sciencedirect3, citepseerx4, and SpringerLink5. A set of keywords was chosen in relation to the remote sensing domain and based on preliminary screening of the research field. The keywords considered in the research initially were as follows: geomatics data, pattern recognition, artificial intelligence, machine learning, neural networks, supervised learning, unsupervised learning, statistical methods, active learning, imbalanced class learning, deep learning, convolutional neural networks, classification, segmentation, detection, pattern recognition, applications, remote sensing data, hyperspectral data, point clouds data, RGB-D data, thermal data, and trajectory.

To obtain more accurate results, the keywords were aggregated. In a set of queries, the keyword geomatics data was combined with others related to the methodologies (ML, DL, and more), and in other sets, remote sensing data were combined with the application (classification or detection). Each query produced a large quantity of articles, which were selected based on their pertinence and year of publication. Articles considered inconsistent with the research topic and published before the year 2016 were removed from the list.

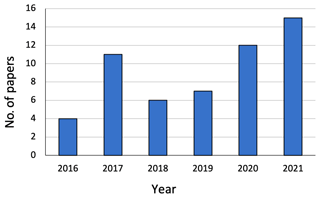

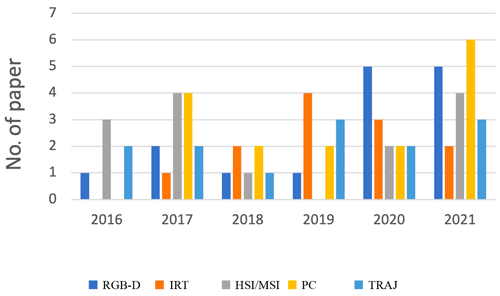

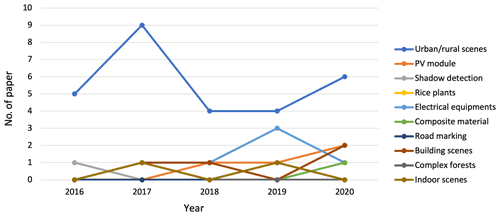

The temporal distribution of works dealing with geomatics data is shown in Figs. 2 and 3. The papers considered for the review were published between the years 2016 and 2021. Figure 2 shows the temporal distribution of works dealing with AI for geomatics data. Figure 3 highlights the number of papers taken into consideration divided by the year of publication and by the type of geomatics data.

This section provides more detail on the articles in which AI algorithms are applied for the management, processing, and interpretation of geomatics data. A set of keywords was used to perform the search phase on the channels listed in Sect. 1.3 and according to the taxonomy designed in Fig. 1. Starting from a brief description of AI algorithms and models, a list of articles was collected respecting the stop criterion described in the search strategy definition. The study aims to classify research published in the field of ML and DL related to several aspects in order to compare these methods and identify their advantages and disadvantages in the application analysis.

2.1 Algorithms and models for GeoAI

AI aims to model the functioning of the human brain and, based on the knowledge acquired, create more advanced algorithms. Data analysis has changed significantly with the emergence of AI and its subsets ML and DL (Paolanti and Frontoni, 2020). Over the past years, ML and feature-based tools were developed with the aim of learning relevant abstractions from data. Nonetheless, after moving into the era of multimedia big data, ML approaches have matured into DL approaches, which are more efficient and powerful to deal with the huge amounts of data generated from modern approaches and cope with the complexities of analysing and interpreting geomatics data. DL has taken key features of the ML model and has even taken it one step further by constantly teaching itself new abilities and adjusting existing ones (LeCun et al., 2015). The most cited definition of ML is by Mitchell: “It is said that a program learns from experience E with reference to certain classes of tasks T and with performance measurement P, if its performance in task T, as measured by P, they improve with experience E” (Mitchell, 1997). In other words, an ML model constantly learns through experience and the rules are not established previously by the programmer, who defines only the features of interest, and then the machine learns by analysing the available data and achieves the results autonomously by making generalisations, classifications, and reformulations. Compared to a traditional approach that consists of identifying a specific function according to which a specific input will always produce a certain output, in the ML generic mathematical and statistical algorithms are used, which, after receiving a series of data through a training phase followed by the evaluation of the results and the optimisation of the parameters, determine the function independently.

DL (Yan et al., 2015; Goodfellow et al., 2016) is a subset of ML that is able to provide high-level abstraction models for a wide range of nonlinear phenomena. The purpose of DL algorithms is to replicate the functioning of the human brain by understanding the path that information takes inside and the way it interprets images and natural language. Therefore, DL architectures have found great application in image classification. In this application we can see the biggest differences between ML and DL. In fact, an ML workflow is started with the manual extraction of significant features from images, so the extracted features allow the creation of a model to categorise objects in the image. Unlike in DL, the feature extraction from images is automatically done and an end-to-end learning is performed in which a network independently learns how to process data and perform an activity. These techniques have resulted in important advances in various disciplines, such as computer vision, natural language processing, facial and speech recognition, and signal analysis in general. DL relies on different models to represent objects. An image, for example, can be processed as a simple vector of numerical samples or with other types of representations. Numerous DL techniques are influenced by neuroscience and are inspired by information processing and communication models of the nervous system, considering the way in which connections are established between neurons based on received messages, neuronal responses, and the characteristics of the connections themselves. DL methods are also able to replace some particularly complex artefacts with algorithmic models of supervised or unsupervised learning through hierarchical characteristic extraction techniques. In fact, they use multiple layers to extract and transform features. Each layer receives the weighted output of a neuron of the previous level. It is therefore possible to switch from the use of low-level parameters to high-level parameters, with the different levels corresponding to different levels of data abstraction. In this way, it is possible to get closer to the semantic meaning of the data and to give them the form of images, sounds, or texts. Several DL architectures, such as deep neural networks, convolutional neural networks, and recurrent neural networks, have been applied to the computer vision, automatic speech recognition, natural language processing, audio recognition, and bioinformatics fields, yielding better performance than ML algorithms in many computer vision tasks (Ongsulee, 2017).

In general, AI-based algorithms, especially deep neural networks (DNNs), are transforming the way of approaching real-world tasks done by humans. Despite applications to problems in geosciences being in their infancy, across the key problems (classification, anomaly detection, regression, space- or time-dependent state prediction) there are promising examples (Zhang et al., 2016; Ball et al., 2017). DNN architectures are increasingly being adopted in geomatics due to their competence to learn relevant abstractions from data. At first, these models were considered “black box” operators, but as their popularity has grown they need to be interpretable and explainable (Xiao et al., 2018; Elhamdadi et al., 2021; Fuhrman et al., 2021). Moreover, deep learning methods are needed to cope with complex statistics, multiple outputs, different noise sources, and high-dimensional spaces. New network topologies that exploit not only local neighbourhood but also long-range relationships are urgently needed, but the exact cause-and-effect relations between variables are not clear in advance and need to be discovered. And more, deep learning models can fit observations very well, but predictions may be physically inconsistent or implausible owing to extrapolation or observational biases. Integration of domain knowledge and achievement of physical consistency by training models about the governing physical rules of geomatics data can provide very strong theoretical constraints on top of the observational ones (Greco et al., 2020).

The main geomatics tasks solved with ML and DL models can be summarised as follows:

-

clustering (Shi and Pun-Cheng, 2019);

-

object detection (K. Li et al., 2020);

-

segmentation (Minaee et al., 2021);

-

part segmentation (Adegun et al., 2018);

-

semantic segmentation (Yuan et al., 2021).

The motivations behind the growing interest by the geoscientific community are numerous. The combination of unprecedented data sources, increased computational power, and recent advances in statistical modelling and machine learning offers exciting new opportunities for expanding our knowledge (Mehonic and Kenyon, 2022; Reichstein et al., 2019).

Clustering is a process of grouping homogeneous elements, based on some characteristics, in a dataset. This operation in everyday life has an unlimited number of applications and is put into practice every time any grouping is carried out (Boongoen and Iam-On, 2018).

The various clustering methods include the following.

-

The first is a connection method, such as linkage, which is a hierarchical method suitable for grouping both variables and observations (single linkage based on the minimum distance, complete linkage based on maximum distance, and average linkage based on average distance).

-

The second is a k-means method, which is a non-hierarchical and vector quantisation method that partitions n observations into k clusters, in which each observation belongs to the cluster with the nearest mean (cluster centres), working as a prototype of the cluster.

-

The last is a spectral cluster, which is an approach with origins in graph theory wherein the method is used to classify communities of nodes in a graph based on the edges connecting them. The process is adaptable and allows clustering non-graph data.

Classification is the process of learning a certain target function f, which maps an input vector x to one of the predefined labels y. The target function is also referred to as the classification model (Tan et al., 2016).

A classification model generated through a learning algorithm must be able to adapt correctly to the input data but also, and more importantly, to correctly predict record class labels that it has never seen before. That is, the key objective of the learning algorithm is to build models with good generalisation skills.

Object detection is an important problem that consists of identifying instances of objects within an image and classifying them as belonging to a certain class (e.g. humans, animals, or cars) (K. Li et al., 2020). The goal is to develop computational techniques and models that provide one of the basic elements necessary for computer vision applications, specifically knowing which objects are in an image. Object detection is the basis of many applications for computer vision, such as instance segmentation, image captioning, and object tracking. From an application point of view, it is possible to group object detection into two categories: “general object detection” and “detection applications” (Liu et al., 2020). For the first, the goal is to investigate methods for identifying different types of objects using a single framework to simulate human vision and cognition. In the second case, we refer to the recognition of objects of a certain class under specific application scenarios: this is the case of applications for pedestrian detection, face detection, or text detection. Currently, the models for object detection can be divided into two macro-categories: two-stage and one-stage detectors. Two-stage models divide the task of identifying objects into several phases, following a “coarse-to-fine” policy. One-stage models complete the recognition process in a single step with the use of a single network.

The problem of image segmentation is a topical research field due to its numerous applications in different fields, from signal processing at the industrial level to the biomedical sector, where it can represent a valid technique for facilitating the reading and quantitative evaluation of the outputs coming from complex diagnostic tools (e.g. magnetic resonance imaging) (Fu and Mui, 1981). Segmentation is the process that divides an image into separate portions (segments) that are groupings of neighbouring pixels that have similar characteristics, such as brightness, colour, and texture. The purpose of segmentation is to automatically extract all the objects of interest contained in an image; it is a complex problem due to the difficult management of the multitude of semantic contents (Sultana et al., 2020).

According to Naha et al. (2020), several recent papers have demonstrated that the use of DL approaches yields very good performance on object part segmentation considering both rigid and non-rigid objects.

As mentioned earlier, an image segmentation model enables partitioning an image into different regions representing the different objects. We talk about semantic segmentation when the model is also able to establish the class for each of the identified regions. In other words, carrying out a semantic segmentation means dividing an image into different sets of pixels that must be appropriately labelled and classified in a specific class of belonging (e.g. animals, humans, buildings).

Semantic segmentation can be a useful alternative to object detection, as it allows the object of interest to cover multiple areas of the image at the pixel level. This technique detects irregularly shaped objects, unlike object detection, whereby objects must fit into a bounding box (Felicetti et al., 2021).

Semantic segmentation of point clouds is also an important step for understanding 3D scenes. For this reason, it has received increasing attention in recent years and a lot of AI approaches have been proposed to automatically identify objects (J. Zhang et al., 2019; Malinverni et al., 2019; Paolanti et al., 2019).

2.2 Geomatics: a fundamental source of data

This section aims to classify the various types of sensors for data acquisition and describe their characteristics. The classification scheme was selected according to the acquisition device and data features, considering the following: (i) the output data structuring, (ii) the active–passive sensors, and (iii) the type of actuation. The main distinction in this review is the type of sensor (i.e. if the acquisition system is supplied with a laser sensor or on a vision sensor, such as a camera). It is fair to state that this is not an exhaustive list of all possible geomatic techniques; rather, it attempts to embrace all the sensors that generate data for which interpretation, given their complexity, requires the aid of statistical learning-based approaches.

A revolutionary turning point in terms of the concept of geomatics was brought by the research paper titled “Geomatics and the New Cyber-Infrastructure” (Blais and Esche, 2008). In that paper, the authors state that geomatics deals with multiresolution geospatial and spatiotemporal information for all kinds of scientific, engineering, and administrative applications. This can be summarised as follows: geomatics is far more than the concept of simply measuring distances and angles. A few decades ago, surveying technology and engineering involved only distance and angle measurements and their reduction to geodetic networks for cadastral and topographical mapping applications. Surveying still plays a leading technological role, but it has evolved in new forms. Topographical mapping, once conducted with bulky instruments requiring complex computations on the part of researchers, has now become a by-product of geospatial or GIS; digital images, obtained with different sensors (from satellite images to smartphones), can be used to accomplish the tasks of both classifying the environment and making virtual reconstructions. Survey networks and photogrammetric adjustment computations have largely been replaced by more sophisticated digital processing with adaptive designs and implementations or ready-to-use equipment, such as terrestrial laser scanners (TLSs). Multiresolution geospatial data (and metadata) refer to the observations and/or measurements at multiple scalar, spectral, and temporal resolutions, such as digital imagery at various pixel sizes and spectral bands that can provide different seasonal coverage.

Analysis tasks can be performed at a regional level thanks to the use of high-resolution images from satellite or aerial images; inferring information is possible through land usage classification, and the shape can be described using ranging techniques like lidar and radar pulse. The possibilities offered by new acquisition devices for dealing with architectural-scale complex objects are numerous. Low-cost equipment (cameras, small drones, depth sensors, and so on) is capable of accomplishing reconstructions tasks. Of course, accuracy must also be considered. In fact, georeferencing complex models require more sophisticated and accurate data sources like a GNSS (Global Navigation Satellite System) receiver or TLS. In the case of small objects or artefacts, terrestrial imagery and close-range data are the best solutions for obtaining detailed information. In the following, we report the main areas of application that are closely related to geomatics, which emerged from the previous analysis: natural environment; quality of life in rural and urban environments; predicting, protecting against, and recovering from natural and human disasters; and archaeological site documentation and preservation. In sum, geomatics can cover the spectrum of almost every scale (Böhler and Heinz, 1999); while there is no panacea, the integration of all these data and techniques is the best solution for 3D surveying, positioning, and feature extraction.

2.2.1 RGB-D cameras

Before Microsoft Kinect was launched in November 2010, collecting images with a depth channel was a burdensome and expensive task. Using depth as an additional channel alongside the RGB input has the scale variance problem present in image-convolution-based approaches. In the last few years, there have been attempts to combine the increasing popularity of depth sensors and the success of learning approaches, such as ML and then DL (Chu et al., 2018; Wang et al., 2021). RGB-D cameras generate a colour representation (red, green, and blue) of a scene and allow reconstruction of a depth map of the scene itself (Han et al., 2013; Liciotti et al., 2017). The depth map is an image M of M×N dimension, in which each pixel p(x,y) represents the distance in the 3D scene of the point (x,y) from the sensor that generated it (Fu et al., 2020; Jamiruddin et al., 2018). The use of depth images compared to RGB or BW (black and white) images provides information about the third dimension and simplifies many computer vision and interaction problems, such as (i) background removal and scene segmentation, (ii) tracking of objects and people, (iii) 3D reconstruction of the environment, (iv) recognition of body poses, and (v) implementation of gesture-based interfaces (Han et al., 2013). To determine the depth map, the considered devices use a pattern projection technique. This involves a stereo vision system consisting of a projector and camera pair to define an active triangulation process.

For a semantic segmentation task involving urban–rural scenes, the work of (L. Li et al., 2017) proposes a method based on RGB-D images of traffic scenes and DL. They use a new deep fully convolutional neural network architecture based on modifying the AlexNet (Krizhevsky et al., 2012) network for semantic pixel-wise segmentation. The RGB-D dataset is built by the cityscapes dataset (Cordts et al., 2016), which comprises a large and diverse set of stereo video sequences of outdoor traffic scenes from 50 different cities. The original AlexNet is modified since they perform a batch normalisation operation on the output of each convolutional layer, and during the experimental phase, they find that this modification improves the segmentation accuracy. The modified version of AlexNet is used as the encoder network of the architecture. During the test, they evaluate the semantic segmentation performance of the proposed architecture, comparing the results obtained with RGB-D images as input and only RGB images as input. The experimental results show that the use of the disparity map increases the semantic segmentation accuracy, achieving good real-time performance.

To semantically segment RGB-D frames collected in commercial buildings and to recognise all component classes of buildings, a DL artificial neural network method is used in Czerniawski and Leite (2020). The purpose is to demonstrate that the proposed method can semantically segment RGB-D images into 13 classes of components even if the training dataset is very small. The dataset was purposely built and manually annotated using a common building taxonomy to provide complete semantic coverage. The supervised neural network used is DeepLab (Chen et al., 2017), a state-of-the-art model for semantic segmentation of images that assigns a semantic label to each pixel of the image. To demonstrate the validity of the approach, the authors compare the performance with several state-of-the-art DL methods used for building object recognition.

Finally, RGB-D images have been exploited to fulfil localisation tasks (Zhang et al., 2021) and 3D object part segmentation (Zhuang et al., 2021).

2.2.2 Infrared cameras

Thermography, or thermovision, is a non-invasive, simple, and precise investigation system that provides real-time infrared images of any object opaque to this radiation, allowing the visualisation (and quantitative representation) of its surface temperature (Gade and Moeslund, 2014). The images are usually represented in false colour scales, in which a certain colour corresponds to a certain temperature and is not the real colour of the object.

Infrared thermography (IRT) is a well-known method of examination, which is useful because it is safe, painless, non-invasive, easy to reproduce, and has low running costs. IRT combined with AI-based automated image processing can easily detect and analyse damage or other failures in images (Kandeal et al., 2021). Despite the literature proposing approaches based on single RGB data (Espinosa et al., 2020), IRT images proved to be more reliable.

The classification of defects in thermal images through an initial prevention mechanism is dealt with in the work of Ullah et al. (2017), which uses an artificial neural network architecture, specifically multi-layered perceptron (MLP), for this task. The system classifies the thermal conditions of components into two classes: “defect” and “non-defect”. They initially extract statistical first- and second-order features departing from thermal sample images. To increase the classification performance, they augment MLP with the graph cut, obtaining better performance in the identification of defects and the classification of the images.

The same application is considered in the paper of Nasiri et al. (2019), in which the authors propose a convolutional neural network architecture to automatically detect faults and monitor equipment operations of a cooling radiator. They consider infrared thermal images and a DL architecture that has the task of feature extraction and classification of six conditions of the radiator. The architecture is constructed based on a VGG-16 structure, followed by batch normalisation, dropout, and dense layers. During the experimental phase, they compare the classification performance with other traditional artificial neural networks, demonstrating high performance and accuracy in various working conditions. In the work of Ullah et al. (2020), a novel model is proposed that detects an increase in temperature in high-voltage electrical instruments to promptly intervene to avoid equipment failure that could damage the system. Any anomalies must be detected and eliminated. In this context, the authors identify faults and anomalies in IRT images using a combined DL architecture. The infrared thermal images are the input of a convolutional neural network for the feature extraction task. Then, the features vector is the input of five different ML models (RF, SVM, J48, NB, BayesNet), which are selected to categorise the performance in the classification task into defective and non-defective classes. The experimental results demonstrate that the best classifier is the RF classifier, which is the best for discriminating the binary classification.

Classification of faults in electrical equipment is considered in the work of Duan et al. (2019). They use an artificial neural network to automatically classify defects as water, oil, and air, which can reduce the performance of some materials. Through a quantitative comparison, they demonstrate that the approach that uses coefficients as features provides better performance than the one using raw data.

Finally, another interesting method is proposed by Chellamuthu and Sekaran (2019), which uses a deep neural network to classify parts of infrared images into two classes: defect and non-defect. They intend to evaluate and monitor the parts of electrical equipment to identify thermal defects at an early stage in order to promptly intervene to avoid worse damage. First, the segmented thermal images are considered. Then, based on the optimal features, the feature extraction procedure follows. The optimal feature extraction is obtained using the Opposition-based Dragonfly Algorithm (ODA). The experimental results demonstrate that the approach provides better accuracy in performance than other classification methods.

Defect detection in infrared images of photovoltaic (PV) modules is addressed in the works of Akram et al. (2020), Pierdicca et al. (2018), and Luo et al. (2019). The increase in the number of PV installations makes automatic monitoring methods important since manual and visual inspection has several limitations. In this context, these works propose a method based on a DL algorithm that can automatically identify defects in infrared images on PV modules. The main approaches used are visual geometry group-Unet (VGG-Unet) and mask region-based convolutional neural network (Mask R-CNN) architecture that simultaneously performs object detection and instance segmentation (Pierdicca et al., 2020a).

Considering the high performance in object detection achieved by YOLO (You Only Look Once) (Redmon et al., 2016), the authors in Tajwar et al. (2021) developed a tool for hotspot detection of PV modules using YOLO. Firstly, the IRT images were converted into a dataset for a classifier to detect the hotspot of PV modules. Then the learner is trained and tested with the dataset. After that, the output validates with the IRT images of PV modules. The same deep learning model choice was also adopted in Greco et al. (2020) for addressing the problem of PV panel detection.

PV module faults are also classified in the work of X. Li et al. (2018), which aims to propose a new method for automatically classifying defects in infrared thermal images.

Defect detection is the focus of the work of Gong et al. (2018), in which the authors aim to identify anomalies in electrical equipment by implementing a model based on DL. The implemented defect identification models are InceptionV2 and Inception Resnet V2. The performance of the method is also evaluated for infrared images with artificial defects.

Finally, IRT images are also used to detect faults in infrared thermal images of composite materials used in aircraft, vehicles, and several industries by exploiting their mechanical properties (Bang et al., 2020) and building monitoring (Al-Habaibeh et al., 2021).

2.2.3 Digital photogrammetry and terrestrial laser scanning

Photogrammetry is a technique that enables metrically determining the shape, size, and position of an object having two distinct photographic frames that should be central projections of the object itself (Baqersad et al., 2017). Also, 3D laser scanning technology (Lemmens, 2011) has been widely used in the engineering and construction industries. 3D laser scanners work on the principles of lidar (light detecting and ranging) by emitting a laser pulse, which hits a target and subsequently returns to the sensor (Liscio et al., 2018; Di Stefano et al., 2021).

The points captured are called a point cloud, which is then exported into laser scanning software that can create fully coloured 3D models that allow for point-to-point measurements and excellent visualisation of the scene.

The use of ML and DL techniques for point cloud classification and semantic segmentation was successfully investigated in the last decade in the geospatial environment (Weinmann et al., 2015; Qi et al., 2017a; Özdemir and Remondino, 2019). Several methods have been recently proposed (Shen et al., 2021; Xiao et al., 2021; Geng et al., 2021), and in the following a detailed review of the main approaches in the geomatics field is reported.

The pioneer DL algorithm that processes 3D point clouds is in Qi et al. (2017a). It automatically classifies and performs the semantic segmentation directly on the point clouds. They consider an architecture that first analyses the features of the single points and then identifies them globally. However, this architecture does not capture local geometries, so optimisation of this methodology is presented in Qi et al. (2017b). In this paper, to learn local features by exploiting the metric space distances, a hierarchical grouping is considered. For local neighbourhoods, the experimental phase shows improved results compared with other state-of-the-art architectures.

To handle 3D point clouds with spectral information acquired by lidar systems, the work presented by Yousefhussien et al. (2018) uses a method based on DL algorithms. They propose a modified version of PointNet (Qi et al., 2017a) to obtain a model able to operate with complex 3D data acquired from overhead remote sensing platforms using a multi-scale approach. Their DL network can directly deal with unordered and unstructured point clouds without modifying the representation and losing information. Moreover, to demonstrate the accuracy of their method, they present a performance comparison with other state-of-the-art methods. Papers like Zhang and Zhang (2017), Wang and Ji (2021), and Lee et al. (2021) make extensive use of approaches based on DL for semantic parsing of 3D point clouds of urban building scenes.

In Zhang et al. (2018), the problem of semantic segmentation of 3D scenes on a large scale is tackled by considering a fusion between 2D images and 3D point clouds. The authors create a Deeplab-Vgg16 high-resolution model (DVLSHR) based on Deeplab-Vgg16 and the Deep Visual Geometry Group (VGG16), which is successfully optimised by training seven deep convolutional neural networks on four reference datasets. The preliminary segmentation is made using 2D images, which are then mapped into 3D point clouds, taking into account the relationships among the images and the point clouds. Subsequently, based on the mapping, the physical planes of buildings are extracted from the 3D point clouds.

In the field of digital cultural heritage (DCH), the work of Pierdicca et al. (2020b) uses an improved version of DGCNN (Wang et al., 2019) that adds meaningful features, such as normal and colour. The aim is to semantically segment 3D point clouds to automatically interpret the architectural parts of buildings and obtain a useful framework for documenting monuments and sites. They use a novel dataset comprising both indoor and outdoor scenes, which are manually labelled by experts and which belong to different historical periods and styles (Matrone et al., 2020b). Extensive experiments on the purposely created dataset show the efficiency of the optimised architecture, and the results are compared with those of other state-of-the-art models. The authors have also extended the proposed approach by comparing the DL approach with an ML-based one and by the improvement of DGCNN with other relevant features (Matrone et al., 2020a).

A DL-based framework for automatically extracting, classifying, and completing road markings from three-dimensional mobile laser scanning (MLS) point clouds is presented by Wen et al. (2019). A modified version of the UNet architecture is used to extract road markings. For classification, a method based on clustering and convolutional neural networks is developed, and it is more efficient with different sizes. Finally, to complete the road marking, a method based on a conditional generative adversarial network (cGAN) is used, which is more effective since it considers the continuity and regularity of the lane lines. The dataset consists of three scenes: highways, urban roads, and underground parking, with raw point clouds and labelled road marking ground truths.

In the context of urban and rural scenes, the paper of Yang et al. (2017) proposes a method for semantically labelling 3D point clouds acquired by an airborne laser scanner using an approach based on DL. A point-based feature image generation method extracts local geometric features, global geometric features, and full-waveform features from 3D point clouds, transforming them into an image. Then, the feature images are the input of a convolutional neural network for semantic labelling. Finally, to compare the performance of the proposed approach with state-of-the-art methods, they test the framework using other publicly available datasets, achieving a high level of overall accuracy with the proposed network.

To solve a similar issue, the papers of Wang et al. (2019) and Can et al. (2021) use a novel convolutional neural network called Dynamic Graph CNN (DGCNN), which includes a new module called EdgeConv that acts on graphs dynamically computed in each layer of the network. The EdgeConv module incorporates local neighbourhood information, can be applied to learn global shape properties, and captures semantic characteristics in the original embedding. To demonstrate the performance of the proposed model, the authors use different public datasets: ModelNet40, ShapeNetPart, and S3DIS. Moreover, they compare the results with other models based on DL, obtaining better results in terms of accuracy.

To minimise the large number of point clouds needed to classify urban objects, a solution is proposed by Balado et al. (2020). The problem that they intend to address is in Balado et al. (2020). They use convolutional neural networks to convert point clouds into personal computer (PC) images, taking into account that acquiring and labelling point clouds is more expensive and time-consuming than the corresponding image. They generate several sample images per object (point clouds) using multi-view and combine PC images with images derived from online datasets: ImageNet and Google Images. The DL algorithm chosen is InceptionV3. To validate the proposed methodology, they also consider the IQmulus & TerraMobilita Contest dataset, obtaining correct classification with few samples.

Complex forest scenes represented by 3D point clouds are classified using a method based on DL in the work of Zou et al. (2017). A new voxel-based DL method classifies species of trees using 3D point clouds of forests as input and consisting of three phases: individual tree extraction, feature extraction, and classification using DL. Moreover, two different datasets acquired using terrestrial laser scanning systems are used. Then, to evaluate the performance and demonstrate the effectiveness of the proposed method, they also compare it with other classification methods for 3D tree species. Other interesting works worth mentioning in this field are Chen et al. (2021) and Pang et al. (2021).

2.2.4 Remote sensing: multispectral and hyperspectral data

Remote sensing (Toth and Jóźków, 2016) is a technical and scientific discipline that allows obtaining quantitative and qualitative information and measuring the emission, transmission, and reflection of electromagnetic radiation from surfaces and bodies placed at a long high distance from an observer. Recently, ML approaches as part of the AI domain and its DL subset have become increasingly important in MSI and HSI remote sensing analysis (Yuan et al., 2021; Zang et al., 2021). Several works have been proposed with the aim of expediting time-consuming processes (Zhu et al., 2017).

In the following, different papers are presented to solve the classification task of HSI–MSI images of urban and rural scenes, mainly using DL algorithms.

The only paper considered that uses an approach based on ML is Sharma et al. (2017). The aim is to evaluate the performance of different supervised ML classifiers in the discrimination of six vegetation physiognomic classes. They use supervised approaches with different model parameters and demonstrate that the random forests classifier provides the greatest accuracy and kappa coefficient.

The work of Zhong et al. (2017) proposes a system that classifies hyperspectral images using a supervised model based on DL. The input of the Spectral–Spatial Residual Network (SSRN) is represented by 3D raw cubes. Through identity mapping, each of the 3D convolutional layers is connected by the residual blocks. Then, to improve the classification accuracy and the learning process, a batch normalisation algorithm is used on each convolutional layer. The dataset is made up of agricultural, rural–urban, and urban hyperspectral images. The qualitative and quantitative experimental results indicate that the proposed framework achieves good classification accuracy. Many other papers adopt similar approaches, like Mendili et al. (2020) for LC–LU classification, Audebert et al. (2018) for semantic labelling, shadow detection in Movia et al. (2016), and precision farming in Zheng et al. (2020).

To deal with the hyperspectral image classification problem, Yang et al. (2018b) present a method for increasing the classification performance by exploiting both the spatial context and spectral correlation, although in general only the spatial context is considered. Specifically, they consider and evaluate the performance of four convolutional neural networks: 2DCNN, 3DCNN, recurrent 2DCNN, and recurrent 3DCNN. Six open-access datasets are used for classification. Moreover, to demonstrate that DL methods provide better performance in the classification task, four architectures are compared with other traditional methods.

In addition, Wu and Prasad (2017) propose a method for classifying hyperspectral images using DL methods. They highlight the need to have a large amount of labelled data for training, and to solve this problem they propose a semi-supervised DL approach that requires limited labelled data and a large amount of unlabelled data, which they use with their pseudo-labels (cluster labels) to pre-train a deep convolutional recurrent neural network that they fine-tune using a smaller amount of labelled data. Moreover, to use spatial information they implement a constrained Dirichlet process mixture model (C-DPMM) for semi-supervised clustering, also deriving a variational inference model.

The paper of Zhao and Du (2016) proposes a novel classification framework based on a spectral–spatial feature (SSFC) that uses dimension reduction and DL methods to extract spectral and spatial features, respectively. Spectral feature extraction is applied to high-dimensional hyperspectral images using a local discriminant algorithm, while a convolutional neural network is implemented to determine high-level spatial features. Finally, the multiple features extracted jointly considering spectral and spatial features are used to train the multiple-feature-based classifier for image classification. To demonstrate the performance of the SSFC classifier, they compare the results with those of other traditional classification methods.

A target detection for hyperspectral images using a deep convolutional neural network is proposed in W. Li et al. (2017). To train this multi-layer network, a high number of labelled samples is needed, but for target detection, few labelled targets are available. Hence, to enlarge the dataset, they further generate pixel pairs. In the experimental phase, two cases are considered: in the first, for anomaly detection, using similarity measurements, a convolutional neural network classifies different pixel pairs obtained by combining the centre pixel and its surrounding pixels; in the second, for supervised target detection, a convolutional neural network classifies different pixel pairs obtained by combining the testing pixel and the known spectral signatures.

The aim of Liu et al. (2016) is the classification of hyperspectral images using active DL. As obtaining well-labelled samples for remote sensing applications is very expensive, they consider weighted incremental dictionary learning. The algorithm selects samples by maximising two selection criteria: representativeness and uncertainty. Moreover, the network is actively trained to select training samples in each iteration. To validate the proposed architecture, during the experimental phase they compare the performance with other classification algorithms that use active learning.

In Chen et al. (2016), the argument concerns the classification task of hyperspectral data. The authors propose a DL approach to elaborate hyperspectral images. In particular, they combine a novel feature extraction (FE) and image classification architecture based on a deep belief network (DBN) to obtain high classification accuracy. During the experimental phase, they demonstrate that the framework provides encouraging classification results compared with other state-of-art methods. Moreover, they demonstrate the great potential of DL methods for classifying hyperspectral images, even confirmed in more recent works (Xu et al., 2021).

The paper proposed by Hong et al. (2020b) aims to demonstrate that the use of a framework based on DL, in particular a cross-modal DL framework called X-ModalNet, provides good results for classification tasks of multispectral imagery (MSI) and synthetic aperture radar (SAR) data. The architecture consists of three well-designed modules: a self-adversarial module, an interactive learning module, and a label propagation module. During the experimental phase, the authors compare the classification performance with other state-of-the-art methods, demonstrating significant improvement.

In the paper of Hong et al. (2020a), a framework based on DL is presented to classify hyperspectral data. In particular, convolutional neural networks and graph convolutional networks are used to classify hyperspectral images. The authors develop a new minibatch graph convolutional network to solve the problem of huge computational costs in large-scale remote sensing problems. The mini-graph convolutional network infers out-of-sample data without the need to retrain the networks and improves the classification performance. Since convolutional and graph convolutional networks extract different types of features, they are fused based on three strategies (additive fusion, element-wise multiplicative fusion, and concatenation fusion) to increase classification performance. The experimental results from three different datasets demonstrate that the use of mini-graph convolutional networks provides better performance than graph convolutional networks as well as combined convolutional and graph convolutional GCN models.

The work presented by Y. Li et al. (2019) is worth mentioning, which detects changing in synthetic aperture radar (SAR) images. The authors use a DL architecture, specifically a convolutional neural network trained to obtain a classifier able to distinguish modified pixels from unmodified pixels. This task is very important when disasters occur where it is difficult to obtain prior knowledge. To address this issue, they modify a supervised training process into an unsupervised learning process. Moreover, this method does not require image preprocessing and a filtering operation for SAR images. A convolutional neural network makes use of the spatial feature and neighbourhood information on pixels to learn the hierarchical features of the images and implement an end-to-end framework.

2.2.5 GNSS positioning

The GNSS (Global Navigation Satellite System) is a positioning system based on the reception of radio signals transmitted by various constellations of artificial satellites (Groves, 2015). Modern GPS receivers have achieved very low costs. The market now offers low-cost solutions for all uses, which are effective not only for satellite navigation but also for civil uses, monitoring mobile services, and territorial control. Consequently, trajectory forecasting has been a field of active research owing to its numerous real-world applications, thanks to the ever-increasing availability of GNSS data, for both pedestrians (Kothari et al., 2021) and vehicles (Siddique and Afanasyev, 2021).

The aim of the paper of Endo et al. (2016) is to address the problem of extracting the characteristics that estimate users' transport modes based on their movement trajectories. To compensate for a lack of handcrafted functionality, they propose a method that automatically extracts additional functionality using a deep neural network. A classification model is constructed in a supervised manner using both deep and handcrafted characteristics. The effectiveness of the proposed method is demonstrated through several experiments using two real datasets, comparing the accuracy with that of previous methods.

Another paper (Habtemichael and Cetin, 2016) presents a nonparametric, data-driven methodology for short-term traffic prediction based on recognising similar traffic patterns, employing an advanced K-closer algorithm. Additionally, winsorisation of neighbours is implemented to reduce the consequences of predominant candidates, and the rank exponent is applied to aggregate candidate values. The robustness of the proposed method is demonstrated by implementing it on large datasets derived from different regions and comparing the performance with advanced time series models, such as the SARIMA and Kalman filter adaptive models proposed by others. Furthermore, the effectiveness of the proposed advanced K-nearest neighbour (k-NN) algorithm is evaluated for multiple prediction stages, and its performance is also tested with data with missing values. This study provides strong evidence showing the promise of a nonparametric, data-driven method for short-term traffic prediction.

Obtaining knowledge from the GPS tracks of human actions is the topic of the work of Jiang et al. (2017). The authors present TrajectoryNet, a neural network architecture for point-based trajectory classification to infer real-world human transport modes from GPS tracks. A new representation is developed that includes the original feature space into another space, which can be recognised as a form of base expansion, to overcome the challenge of capturing the underlying latent factors in the low-dimensional and heterogeneous feature space imposed by GPS data. A classification accuracy greater than 98 % is achieved for identifying four types of transport modes, which exceeds the performance of existing models without further sensory data or prior knowledge of the location.

According to Xiao et al. (2017), transport mode identification can be used in a variety of applications, including human behaviour research, transport management, and traffic control (Yang et al., 2021). In this paper, a learning-set-based method is presented to infer hybrid modes of transport employing only GPS data. First, to distinguish between diverse modes of transport, a statistical approach is used to produce global features and extract different local features from sub-trajectories after trajectory segmentation before these features are combined in the classification step. Second, to obtain better performance, tree-based ensemble models (random forest, gradient boosting decision tree, and XGBoost) are used instead of traditional methods (K-nearest neighbour, decision tree, and support vector machines) to classify the different transport mode tools.

Correct detection in public transport modes is a fundamental task in smart transport systems according to James (2020). Hence, the aim is to utilise GPS trajectories of random lengths to produce efficient travel mode results in global and online classification scenarios. Raw GPS data are processed to calculate preliminary movement and displacement properties, which are fed into a tailored deep neural network. The results show that the approach can significantly exceed state-of-art travel mode identifications with the same dataset with little computation time. Moreover, an architecture test is performed to determine the best-performing structure for the proposed mechanism.

According to the work of Dabiri et al. (2019), recognising passenger transport modes is important for many issues in the transport field, such as travel demand analysis, transport planning, and traffic management. The paper aims to classify travellers' modes of transport based only on their GPS trajectories. First, a segmentation process is developed to classify a user's journey into GPS segments with only one mode of transport. Most researchers have suggested modality inference models based on hand-built functionality, which can be vulnerable to traffic and environmental conditions. SECA combines a convolutional–deconvolutional auto-encoder and a convolutional neural network into architecture to perform supervised and unsupervised learning simultaneously.

In another paper (Dabiri et al., 2020), the same authors consider the fact that transportation agencies are beginning to leverage the more available GPS trajectory data to support their analyses and decision-making. Although this representation of mobility data adds meaningful value to several analyses, a challenge is the lack of knowledge regarding the kinds of vehicles that produced the recorded tours, which restricts the value of the trajectory data in the transport system analysis. The paper presents a new design of GPS trajectories, which is compatible with deep learning models and also obtains vehicle movement features and road features. To this end, an open-source navigation system is also applied to obtain more detailed information on travel time and the distance between GPS coordinates. The experimental phase shows that the proposed CNN-VC model consistently outperforms both classical ML algorithms and other essential DL methods.

R. Zhang et al. (2019) consider that, although some studies on the classification of trajectories have been conducted, they require manual selection of characteristics or fail to completely consider the influence of time and space on the classification results. The features obtained are joined to provide the results of the final classification. Then, they present an approach based on the latest DenseNet image classification network structure and include the attention tool and residual learning. This model can fully extract spatial features to increase feature propagation and capture long-term dependence. The results show that the design outperforms traditional models in terms of accuracy, recall, and f1 score (the harmonic mean of precision and recall) .

Duan et al. (2018) consider the nonlinear and space–time characteristics of urban traffic data, proposing a deep hybrid neural network enhanced by a greedy algorithm for the prediction of urban traffic flow using GPS tracking of taxis. They propose a deep neural network model that combines a convolutional neural network, which extracts spatial features with long-term memory that captures temporal information, to predict the flow of urban traffic. Experimental results based on real taxi GPS trajectory data from the city of Xian show that the enhanced deep hybrid CNN-LSTM model has higher classification accuracy and requires a shorter time than traditional methods.

Finally, based on GPS data the work presented in Pierdicca et al. (2019b) shows that the case of urban parks is difficult, requiring knowledge of many variables, which are difficult to consider simultaneously. One of these variables is the set of people who use the parks. This study aims to produce a method to identify how an urban green park is used by its visitors to provide planners and managing authorities with a standardised method. A trajectory classification algorithm is implemented to understand the most common visitor trajectories by obtaining the advantages of GPS and sensor-based traces. Based on these user-generated data, the proposed data-driven approach can determine the park's mission by processing visitor trajectories while using a mobile application specifically designed for this purpose.

As mentioned previously, the use of AI in geomatics data management is not a new problem. Several studies have been conducted on this topic, and many are currently in development. Geomatics data are the core of several applications in which ML and DL have been applied.

The use of geographical and spatial information within society as well as in academic work has increased rapidly in recent decades. This also means that geomatics has started to create problems in both the academic and non-academic worlds. First, it bridges borders that have been in place for a long time. Second, geomatics, or rather the basic concepts of geomatics, are increasingly being used. Spatial analysis has proven to be important in all disciplines. We can find examples of strong GIS units in, for example, humanities (archaeology, human ecology, language studies, etc.), social science (human and economic geography, economy, economic history, etc.), and medicine (social and occupational medicine, epidemiology, etc.). Thus, geomatics are part of research in most disciplines, and many users are facing issues related to the integration of geomatics in their field. Geomatics are also used frequently in interdisciplinary settings, which leads to specific issues.

We close this paper by returning briefly to the questions raised at the beginning, which remain largely open.

Comparing ML and DL, which is the most commonly used in geomatics?

First of all, it is necessary to clarify that DL is a type of ML approach; in the following we compare DL and ML, and we distinguish approaches that use DL from those that use ML except DL.

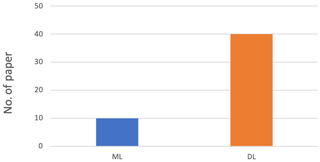

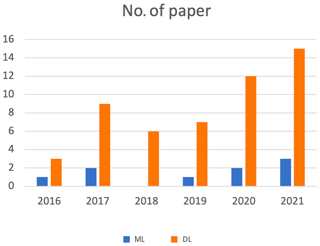

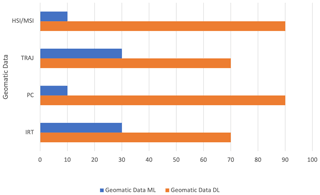

The comparison between ML and DL methods is shown in Figs. 4 and 5. Figure 4 shows that the most commonly used method, especially in the last years, is DL, with an average rate of 80 % compared to a rate of 20 % for ML.

Figure 5 compares the two methodologies during the time interval considered, confirming that there is greater use of DL than ML to deal with geomatics data in the period taken into consideration.

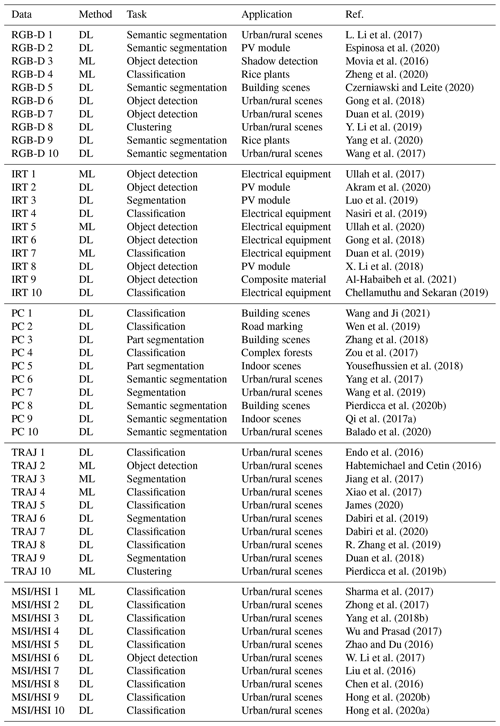

Table 1 summarises 10 of the applications reviewed for each kind of data, comparing the input, the task, and the AI method chosen.

L. Li et al. (2017)Espinosa et al. (2020)Movia et al. (2016)Zheng et al. (2020)Czerniawski and Leite (2020)Gong et al. (2018)Duan et al. (2019)Y. Li et al. (2019)Yang et al. (2020)Wang et al. (2017)Ullah et al. (2017)Akram et al. (2020)Luo et al. (2019)Nasiri et al. (2019)Ullah et al. (2020)Gong et al. (2018)Duan et al. (2019)X. Li et al. (2018)Al-Habaibeh et al. (2021)Chellamuthu and Sekaran (2019)Wang and Ji (2021)Wen et al. (2019)Zhang et al. (2018)Zou et al. (2017)Yousefhussien et al. (2018)Yang et al. (2017)Wang et al. (2019)Pierdicca et al. (2020b)Qi et al. (2017a)Balado et al. (2020)Endo et al. (2016)Habtemichael and Cetin (2016)Jiang et al. (2017)Xiao et al. (2017)James (2020)Dabiri et al. (2019)Dabiri et al. (2020)R. Zhang et al. (2019)Duan et al. (2018)Pierdicca et al. (2019b)Sharma et al. (2017)Zhong et al. (2017)Yang et al. (2018b)Wu and Prasad (2017)Zhao and Du (2016)W. Li et al. (2017)Liu et al. (2016)Chen et al. (2016)Hong et al. (2020b)Hong et al. (2020a)Do geomatics data influence the choice of using one methodology rather than another?

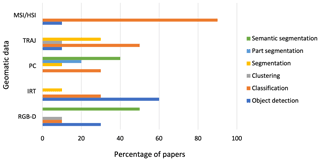

Figure 6 shows the results in percentage terms. In the graphs, we have grouped the papers on geomatics data and the employed approach. For all data, the use of DL is gaining increasing importance, especially in point cloud semantic segmentation and classification. While for IRT data the use of DL techniques is slightly lower than the other data we considered in this work, this probably depends on the technical and physical characteristics of the IRT data. Thus, the use of one technology rather than another also depends on the type of data processed. From this analysis, it is also possible to answer RQ1, as the data demonstrate the trend in preferring DL approaches rather than ML ones.

For which tasks are geomatics data used?

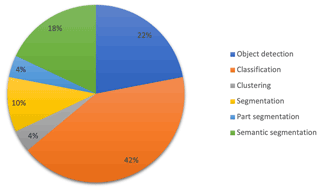

The main tasks performed using geomatics data are shown in Figs. 7 and 8. Observing Fig. 7, the classification task is the most commonly employed, with a rate of 42 %. The object detection task is employed 22 % of the time, and the semantic segmentation task has a rate of 18 %. The remaining 18 % is segmentation, part segmentation, and clustering. These results involve all geomatics data considered in this review. These data, which have different characteristics mainly due to the type of acquisition, are used in tasks that can be included in the three identified categories.

Figure 8Comparison between geomatics data and AI-based tasks. The percentage of papers follow the criteria of matching, for each kind of data, the type of AI approach used.

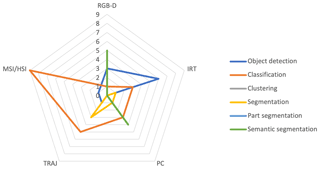

Figure 8 considers the task referring to all types of data. Classification is the task mostly employed with HSI and MSI data, and object detection seems to be the preferred solution when dealing with both IRT and RDB-D images. Meanwhile, the point cloud data, confirming the trend from the literature review, are mainly used for semantic segmentation (with a rate of 40 %), classification (with a rate of 30 %), and part segmentation (with a rate of 20 %) tasks. The object detection task is not executed. On the contrary, the main task for IRT data is object detection with a rate of 60 %, then classification with a rate of 30 %, and finally segmentation with a low rate (10 %). Classification and segmentation are the main tasks for the trajectory data. Other tasks are clustering and object detection with the same rate (10 %).

This analysis has been fundamental to answering RQ2. Indeed, the AI approach is strictly connected with the kind of data, thus depending on the domain in which the approach is applied (see Sect. 3).

Are there relationships between application domains and geomatics data?

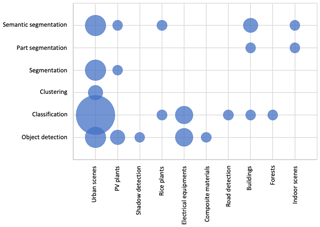

Figures 9 and 10 answer RQ3 and RQ4, which seek to establish whether a relationship exists between the data type and application domains.

Taking into account the research mentioned in this paper, we have identified 10 different aspects (urban–rural scenes, PV module, shadow detection, rice plants, electrical equipment, composite material, road marking, building scenes, complex forests, and indoor scenes).

The analysis shown in Fig. 9 considers each AI-based task based on the application domain. This graph directly comprises the application domain and geomatics data. RGB-D and PC data are most commonly used in different domains, although RGB-D data are most commonly used in urban–rural scenes. It is fair to say that a clear subdivision among the countless application domains in geomatic is impervious; notwithstanding, Fig. 9 highlights the fact that clustering and classification tasks are currently performing best in urban scenes, maybe due to the vast use of geomatic data in such environments. PV plant applications, however, are explored, indicating that AI approaches might be very useful for decision-making in environmental applications, as PV plants are. The remaining data are sparse, highlighting the need for future investigations to outline a straightforward line of research.

The analysis in Fig. 10 raises an additional question: does the application domain change over the years? We can confirm that there is no relation between the application domain and the year, although there is an increase in the application of urban–rural scenes, mainly due to the type of associated data.

Notwithstanding the success of AI in the geomatics, important caveats and limitations have hampered its wider adoption and impact. Figure 11 presents a radar chart that considers the tasks based on the kind of data. This summarises the choice of task with available geomatics data.

Figure 11Spider chart representing the distribution of AI-based approaches in relation to the specific geomatics data.

Exploiting AI for the interpretation of complex geomatics data comes with many challenges, including the variability of the data source, the management of heterogeneous data, the different scales of representation, and the purpose of data processing. However, the more pronounced challenges related to the application can be categorised as follows.

-

Lack of available dataset. Regardless of the topic and/or the kind of data in the training phase (given the assumption that DL models can be arranged to fit a specific task), there is a lack of available datasets in the literature to be used as benchmarks. The great interest demonstrated by the research community in utilising geomatics data with learning-based approaches is hampered by the scepticism in sharing labelled datasets. It is well known that ML and DL are data-driven techniques that perform better as the number of input samples increases. Attempts to solve this problem have involved the generation of synthetic datasets (Pierdicca et al., 2019a; Morbidoni et al., 2020). Recently, generative models have proven to be effective for this task. Generative adversarial networks (GANs) are an appealing DL approach developed in 2014 by Goodfellow et al. (2014). GANs are an unsupervised deep learning approach in which two neural networks challenge each other, and each of the two networks improves at its given task with each iteration. For the image generation issue, the generator begins with Gaussian noise to generate images, and the discriminator determines how valuable the generated images are. This process proceeds until the generator development of outputs. GANs have been used to generate artificial images and videos as well as to generate point clouds (Vondrick et al., 2016; Sun et al., 2020; Rossi et al., 2021). Despite exceptional results in supervised learning since the DL developments, collecting enough data to train the models remains a challenge, and some methods have been developed to train models with little or no data. Zero-shot learning (ZSL) is the task of training a model in some (seen) classes and testing it in other (unseen) classes. Good results have been achieved in ZSL, especially with the adoption of generative methods, but it is unclear whether these results are generalisable to the real world. Moreover, self-supervision as an auxiliary task to the main supervised few-shot learning is considered to be an equivalent method to learning a transferable feature representation from limited examples, since self-supervision can contribute to additional structural information easily ignored by the main task.

-

Domain-dependent models. Regarding its respective geomatics compartment, when there is no all-in-one solution for every task, each AI-based model should be chosen according to the task one is attempting to solve. In other words, as AI improves, the need has emerged to understand how to make such models effective, choosing them according to the kind of data for which they have been designed. Integrating the knowledge of domain experts into AI models increases the reliability and the robustness of algorithms, making decisions more accurate. Moreover, the knowledge acquired for one task can be used to solve related ones thanks to transferring learning strategies. Transfer learning allows leveraging knowledge (such as features and weights) from previously trained AI models for training newer models and even tackling problems like having less data for the newer task. Future models should integrate process-based and machine learning approaches. Data-driven machine learning approaches to geoscientific research will not replace physical modelling but strongly complement and enrich it. Specifically, synergies between physical and data-driven models are needed, with the ultimate goal of hybrid modelling approaches. Importantly, machine learning research will benefit from plausible physically based relationships derived from natural phenomena.

-

Data preprocessing. Broadly speaking, geomatics data have intrinsic features that make them very challenging for DL, especially convolutional neural networks. The reason for this is that AI is intended to utilise data that are ordered, regular, and on a structured grid. This means that data should be ordered, and pre-processing operations are still time-consuming. This represents one of the main bottlenecks, as it requires the presence of an expert for every single application domain.

-

Hardware limitations. Despite the growing computational capabilities of better-performing CPUs and the advances in distributed and parallel high-performance computing (HPC), the computational costs of the above-mentioned tasks remain high. We are not still at a stage where the ratio between time gained and resources spent is in balance, making the use of AI-based methods unhelpful at times compared with time-consuming but more affordable manual solutions.